Why is volume interpretation of 3D seismic data not widely practised by seismic interpreters, even though most oil companies use 3D seismic data to obtain detailed information about their reservoirs? This is a question most geophysicists wonder about at some time. We decided to feature it in our Expert Answers column this month.

The experts answering the question are Terry Zwicker (Samson Canada Ltd., Calgary), Ken Mitchell (K A Projects Ltd., Calgary), Doug Pruden (Nose Creek Geophysical Inc., Calgary), and Barrie Jose (Sproule Associates Ltd., Calgary).

The order of the responses given below is the order in which we received them. We thank the experts for sending in their responses.

Question

Since the early 1990s, 3D seismic has become the norm for oil companies, and it has helped provide clearer pictures and more detail of reservoirs. Conventional interpretation methods, as applied to 3D seismic volumes, use 2D inlines, crosslines and time slices. Visualization and volume interpretation of 3D seismic data have been advocated for many years. However, the adoption and regular use of these techniques have not spread widely among geoscientists, who still do section-based interpretation even on 3D volumes. This is true even in companies where all the data used are 3D seismic.

In your expert opinion, what are the reasons for this lack of adoption of such newer techniques?

Answer 1

The Calgary oil and gas companies I interact with have elected, over the past five years, to use Windows-based software for their interpretation needs. Therefore, I will confine my comments to geophysical interpretation experience with Windows-based visualization and volume interpretation software.

Anyone who has attended geophysical conventions and technical talks, or perused our trade journals, over the last few years cannot help but be impressed by the delightful and compelling images that are frequently on display. Quite often, these data volumes are rendered so that the earth’s secrets are elegantly exposed, or the information has been manipulated so that previously hidden knowledge is laid bare for all to see. However, the reality on the streets of Calgary, as I deal with other companies, is that this type of analysis is not the norm in our industry.

The rank-and-file interpreter is always pushing to image the next prospect, using 3D data to mitigate trap-definition and reservoir risk. Where is that reef edge? How does my structure plunge? Is that amplitude really porosity? This risk mitigation is primarily done by the time-honoured method of calibrating the seismic to well control and then correlating between vertical sections. Isochron, structure and/or amplitude-extraction maps can then be generated to provide the information that is needed.

All this is accomplished using 2D images, and this is because of the technology we use. The mature technology of standard seismic interpretation systems dominates our geoscience interpretation efforts in today’s industry. And for good reason! The standard “picking and mapping” software on our desks today is very close to the ideal product we only dreamed about ten years ago. A geoscientist can interpret large amounts of data in a relatively short time on a very stable platform that is reasonably intuitive to master. Today’s interpreter can easily calibrate and integrate the seismic data with the pertinent engineering and geological information and top it off with a layer from the mineral rights database. And all this can be had for a relatively low cost. A far cry from the ruler-and-pencil maps of yesterday.

In comparison, the immature nature of visualization and volume interpretation software became apparent when our company went shopping for Windows-based visualization software 16 months ago. At that time, our geophysical staff narrowed the alternatives down to three products that seemed viable. The vendors were given a seismic database (three 2D lines and a 3D) and a well database, including digital logs, to load into their visualization software. Each product’s performance was ranked in a matrix of ten weighted criteria developed by our geophysical team. Four of the criteria (software support, horizon picking, cube visualization and attribute classification) were deemed ‘critical and required functionality’. When the dust settled, our consensus at that time was that none of the three products met our minimum expectations. As might be expected, each product demonstrated individual strengths and weaknesses. The deficiencies shared to some degree by one or more of these products included:

- Effectively unable to correlate seismic to well logs

- Difficulty importing data

- Inability to slice relative to a horizon

- Weak horizon picker

- Effectively unable to perform attribute classification

- Limited ability to integrate land and engineering data

- Complex data manipulation and workflow

Our evaluation showed that each product had its basic flaws, but each also offered added interpretive capability that our company could use. This result is consistent with what one would expect of immature and evolving software. In conversations with colleagues in other companies, I have found these to be fairly typical conclusions.
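The weighted-matrix evaluation described above can be sketched as a small scoring routine. This is a minimal Python illustration, not the team’s actual matrix: the weights, the 0-5 scoring scale, the pass mark of 3 and the extra “data import” criterion are all hypothetical, and only the four critical criteria are named in the text (the real matrix had ten).

```python
# Only these four criteria are named in the text; all weights and scores below are hypothetical.
CRITICAL = ("software support", "horizon picking", "cube visualization", "attribute classification")


def evaluate(scores, weights, critical=CRITICAL, minimum=3):
    """Weighted total for one product, plus whether it clears every critical criterion.

    scores:  criterion -> score on an assumed 0-5 scale (missing criteria score 0)
    weights: criterion -> relative weight
    minimum: assumed pass mark that each critical criterion must reach
    """
    total = sum(weight * scores.get(name, 0) for name, weight in weights.items())
    passes = all(scores.get(name, 0) >= minimum for name in critical)
    return total, passes


weights = {"software support": 2, "horizon picking": 3, "cube visualization": 3,
           "attribute classification": 2, "data import": 1}
product_a = {"software support": 4, "horizon picking": 2, "cube visualization": 5,
             "attribute classification": 3, "data import": 4}
print(evaluate(product_a, weights))  # (39, False): a weak horizon picker fails a critical criterion
```

Treating the critical criteria as a hard gate, rather than just heavier weights, reflects the idea that a product can score well overall and still fall short of minimum expectations.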

In spite of these deficiencies, we decided not to wait a couple of years for the ‘finished mature version’. Instead, we elected to lease one of the products so that we could start our climb up the learning curve for visualization and volume interpretation software. The consensus of geoscientists in our company is that we need access to the capabilities of visualization software in order to gain the necessary competitive edge. In practice, a significant number of interpreters take the time to test-drive the software on a couple of projects, but only a few become proficient enough to use it regularly as a standard tool in the interpretation process.

The reality is that ours is a competitive, time-sensitive, deadline-driven industry. I suspect that the average interpreter has been spoiled by the maturity of the standard seismic interpretation systems they presently use and is not patient enough to tap the additional capabilities of the evolving visualization and volume interpretation software now available. The truth is that it is relatively difficult to calibrate your seismic to well logs in this software, and difficult to achieve the same integration with geology, land and engineering data that your workstation presently provides. Project management of all the volumes that can be generated is daunting for the novice user, who can become lost in the many-layered directory-tree “forest” that quickly proliferates. Typically, a geoscientist’s reluctance to use these techniques can be traced to the limitations of the immature software and the complexity of its interface.

The present generation of visualization software is not yet the user-friendly, all-purpose Leatherman tool that you may desire. That said, it is the tool technology of the near future. From my position at the low end of the learning curve, I have found the product useful as a reconnaissance tool at the front end of my 3D interpretation process. The idea is to let the seismic data drive your interpretation at the start: ‘let the data speak to you’ before investing a large amount of time in correlating and horizon picking.

Traditionally, interpreting a 3D seismic volume in a complicated fault regime can involve laborious and frustrating attempts (read weeks and months) to correlate individual fault planes. Using our visualization software on a dip azimuth volume at the start of interpreting a 400 sq. km 3D allowed the data to quickly reveal three different types of fault pattern and gave a first estimate of the timing of structural movement. The task is definitely easier if the fault patterns are resolved before picking horizons, so that you can interpret within a fault compartment.
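For readers curious about what a dip azimuth volume contains, the following is a minimal sketch of one common family of estimators, the gradient (structure-tensor style) approach, applied to a single analysis window. It is an assumption that the leased product works this way; the function name, the (x, y, t) axis order and the convention that x points east and y north are hypothetical. A full dip azimuth volume would repeat the calculation in a window sliding through the cube.

```python
import numpy as np


def window_dip_azimuth(vol, dx=1.0, dy=1.0, dt=1.0):
    """Estimate one dominant dip and azimuth for a small analysis window (hypothetical helper).

    vol is indexed (x, y, t); x is assumed to point east and y north.
    Returns (px, py, dip, azimuth): px and py are time shifts per unit distance,
    dip is their magnitude, azimuth is the down-dip direction in degrees clockwise from north.
    """
    gx, gy, gt = np.gradient(vol, dx, dy, dt)
    inner = (slice(1, -1),) * 3              # drop the one-sided differences at the edges
    gx, gy, gt = gx[inner], gy[inner], gt[inner]
    denom = np.sum(gt * gt)
    # For a locally planar event u(x, y, t) = f(t - px*x - py*y): du/dx = -px * du/dt,
    # so a least-squares fit over the window gives px = -<gx*gt> / <gt*gt> (same for py).
    px = -np.sum(gx * gt) / denom
    py = -np.sum(gy * gt) / denom
    dip = np.hypot(px, py)
    azimuth = np.degrees(np.arctan2(px, py)) % 360.0
    return px, py, dip, azimuth


# Synthetic plane-wave test volume: time increases to the east and decreases to the north.
x, y, t = np.meshgrid(np.arange(40), np.arange(40), np.arange(120), indexing="ij")
vol = np.cos(2 * np.pi / 20 * (t - 0.5 * x + 0.3 * y))   # px = 0.5, py = -0.3 samples per trace
px, py, dip, az = window_dip_azimuth(vol)
```

On this synthetic event the estimate lands within about 1% of the true slopes, with a down-dip azimuth near 121 degrees; the small bias comes from central finite differences on a sampled wave.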

The first 3D loaded into our newly leased visualization software had been ‘horizon picked’ and mapped during the previous month. The true nature of the primary play-type of interest had started to become evident roughly two weeks into that interpretation. An isochron map created at the end of the month-long process clearly showed a dendritic incision cutting diagonally through the zone of interest, resulting in a number of trapping configurations. The same understanding of the play-type was reached in a mere 30 minutes by using the visualization software to fly through a similarity volume. Having this play-type hypothesis at the start of the interpretation would be invaluable, because it allows the interpreter to address all the appropriate issues as the interpretation progresses. In one instance, the isochron map erroneously placed a well with a full reservoir section within the erosional incision, whereas the initial view in the visualization software clearly showed the well correctly situated 180 m back from the erosional edge. Visualization techniques let the objectivity of the seismic data influence the interpretation before you impose your subjective interpretation.
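A similarity volume highlights where neighbouring traces stop resembling each other, which is why an incision edge stands out in a fly-through. The commercial similarity attribute is not specified in the text, so the sketch below uses one simple stand-in, a windowed normalized cross-correlation between each trace and its neighbour in x; the function name, the (x, y, t) axis order and the window length are assumptions.

```python
import numpy as np


def neighbor_similarity(vol, half_window=5):
    """Windowed normalized cross-correlation between each trace and its +x neighbour.

    Hypothetical stand-in for a vendor similarity attribute. vol is indexed (x, y, t).
    Returns shape (nx - 1, ny, nt): values near 1 mean laterally continuous
    reflections; low values flag discontinuities such as faults or incision edges.
    """
    a, b = vol[:-1], vol[1:]
    kernel = np.ones(2 * half_window + 1)

    def window_sum(z):
        # Centred running sum over 2*half_window + 1 samples along the time axis
        return np.apply_along_axis(np.convolve, 2, z, kernel, mode="same")

    num = window_sum(a * b)
    den = np.sqrt(window_sum(a * a) * window_sum(b * b))
    # Avoid dividing by zero in dead (all-zero) windows
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)


# Ten traces along x; traces 5-9 are shifted by a quarter period, like a small fault.
t = np.arange(60)
vol = np.tile(np.sin(2 * np.pi * t / 12), (10, 3, 1))
vol[5:] = np.sin(2 * np.pi * (t - 3) / 12)
sim = neighbor_similarity(vol)
```

Only the x-neighbour is compared here to keep the sketch short; a practical attribute would also compare y-neighbours and usually search over small vertical lags so that dipping but continuous events are not flagged.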

The Windows-based visualization and volume interpretation software presently on the market is not as user-friendly as the standard seismic interpretation workstations currently on our desktops, but it is evolving quickly as new versions are released. The rank-and-file interpreter can immediately realise enormous benefits by adding this capability to his or her interpretation toolbox.

Terry Zwicker

Seismic Dude

Samson Canada Ltd.

Answer 2

To answer the question, I draw attention to several factors that influence how interpreters handle 3D data in their routine work in the real world. As is often the case, geophysicists must make some careful decisions about the best way to perform their work.

Scenario 1. The Stratigraphic Target in an Active Drilling Project

First, let’s consider a stratigraphic target. In voxel interpretation, the interpreter can do a very efficient reconnaissance scan through the 3D volume by adjusting time windows, opacity and colour palette. The interpreter probably already has an idea of the time zone of interest, as well as some of the attribute criteria that will indicate an anomaly. Soon, several 3D perspectives are prepared that demonstrate ‘gas sand’ or ‘reef’, ‘channel’ or ‘fan’ and so on. But even after this quick recon, the interpreter will still have to perform more detailed work to tie these geobodies to well control, identify key horizons and probably make some time and depth maps. This all sounds great, and in principle it certainly is.
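Adjusting time windows and opacity, as described above, amounts to choosing which voxels contribute to the rendered image and how transparent they are. The sketch below is a minimal Python illustration of that idea, assuming a linear opacity ramp and front-to-back alpha compositing straight down the time axis (a map view). The function names and the greyscale colour mapping are hypothetical; commercial renderers use separate colour and opacity tables and arbitrary view directions.

```python
import numpy as np


def opacity_ramp(values, lo, hi):
    """Linear transfer function: transparent below lo, fully opaque above hi (assumed shape)."""
    return np.clip((values - lo) / (hi - lo), 0.0, 1.0)


def render_map_view(vol, t0, t1, lo, hi):
    """Front-to-back alpha compositing down the time axis over samples t0..t1-1.

    vol is indexed (x, y, t). As a simplifying assumption, each voxel's grey level
    reuses the opacity ramp. Returns (image, accumulated_opacity), both (nx, ny).
    """
    image = np.zeros(vol.shape[:2])
    transmitted = np.ones(vol.shape[:2])   # fraction of light still passing through
    for k in range(t0, t1):                 # the time window limits what is rendered
        alpha = opacity_ramp(vol[:, :, k], lo, hi)
        colour = alpha                      # hypothetical greyscale palette
        image += transmitted * alpha * colour
        transmitted *= 1.0 - alpha
    return image, 1.0 - transmitted


vol = np.zeros((2, 2, 10))
vol[0, 0, 4] = 5.0    # a strong anomaly: fully opaque
vol[1, 1, 7] = 1.5    # a moderate anomaly: half transparent
img, opacity = render_map_view(vol, 0, 10, lo=1.0, hi=2.0)
```

Raising `lo` makes weak amplitudes vanish, and narrowing `t0`..`t1` restricts the view to the zone of interest, which is the interactive loop the reconnaissance scan relies on.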

However, to get to this position, the interpreter has had to endure many struggles. The computing platform for this voxel and visualization application is most likely UNIX-based, and the data input, database management, network communication, interpretation module licensing and other assorted issues must all have been resolved. This assumes that the voxel and visualization interpretation system is actually installed and available on the interpreter’s desk, and that he has been trained and has used the software enough to be dexterous with it. So there are a few hurdles to negotiate before an interpreter can enjoy the benefits of 3D voxel analysis.

Then there is the matter of data output. How is the interpreter going to display his results to geologic and engineering colleagues or to clients and management? A map would be useful, as would some key profiles and perhaps a 3D perspective of the target (invariably printed as a 2D image on paper with the requisite “N” arrow to give everyone their bearings). Oddly, this 3D interpretation all sounds very ‘2D’ in the final display. Of course, there is always the option of booking a visualization station (maybe in house, but probably off-site) and scheduling a meeting with all the players to brainstorm. There may be some discussion about the cost of the vis centre time, the current budget, the difficulty of scheduling and the fact that the manager / client may have to attend at the vis centre once some recommendations are ready. This doesn’t even consider that the client / management wants three drilling location recommendations within the 3D area yesterday.

Faced with some of these realities, we must ask: ‘how much better is voxel interpretation and visualization?’ Certainly it is more elegant than profile and time-slice ‘2D’ approaches, but what are the rewards?

For most interpreters, our key deliverable to clients and management is a well recommendation. The more maps-per-minute and locations-per-day we produce, the happier our benefactors are. The “HOW” of 3D seismic interpretation has little impact on corporate numbers this fiscal quarter. Better 3D interpretation is really not of interest to those financing our drilling campaigns unless it results in several of the following:

- higher initial production rates

- increased ultimate hydrocarbon recovery

- timely recommendation to the drilling operations group

- more land area held through production or by simply drilling a well

- higher boepd exit rate

- increased reserve adds

- efficient rig movement and timely drilling of prospect inventory

Then consider that even the best 3D voxel workflow still results in an interpretation that is subject to uncertainty and error.

And what about the maligned 2D profile approach to 3D data volumes? Is it really so inferior? In profile, the interpreter can seed a few key horizons that frame the target zone and autopick them. Thereafter, our interpreter can create some horizon-referenced time slices (horizon slices) and scan through a series of these looking for anomalous attributes. This scanning can even be directed at a horizon-referenced time window, querying the number of attribute samples that meet a given threshold. This approach is certainly profile based, but it also provides a quick, voxel-like scan without the need for a specialized 3D visualization system.
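The profile-based alternative described above can be sketched in a few lines. This minimal example, assuming a volume indexed (x, y, t) and an autopicked horizon stored as integer sample indices, extracts a horizon slice at a chosen offset and counts, trace by trace, the samples in a horizon-referenced window whose attribute value is at least a threshold. The function names and parameters are hypothetical.

```python
import numpy as np


def horizon_slice(vol, horizon, shift=0):
    """Attribute map `shift` samples below (positive) or above (negative) a horizon.

    Hypothetical helper. vol is indexed (x, y, t); horizon holds integer sample
    indices with shape (nx, ny). Indices are clipped to the volume's time range.
    """
    idx = np.clip(horizon + shift, 0, vol.shape[2] - 1)
    return np.take_along_axis(vol, idx[:, :, None], axis=2)[:, :, 0]


def count_in_window(vol, horizon, above, below, threshold):
    """Per-trace count of samples in [horizon - above, horizon + below]
    whose attribute value is at least `threshold` (hypothetical helper)."""
    t = np.arange(vol.shape[2])
    offset = t[None, None, :] - horizon[:, :, None]      # samples relative to the horizon
    in_window = (offset >= -above) & (offset <= below)
    return np.sum(in_window & (vol >= threshold), axis=2)


vol = np.zeros((3, 3, 20))
horizon = np.full((3, 3), 5)     # flat autopicked horizon at sample 5
vol[1, 1, 6] = 2.0               # anomaly just below the horizon
vol[1, 1, 15] = 3.0              # anomaly outside the window
counts = count_in_window(vol, horizon, above=2, below=4, threshold=1.0)
```

Scanning a series of horizon slices is then a loop over offsets, for example `[horizon_slice(vol, horizon, s) for s in range(-10, 11)]`, and the count map can be displayed like any other attribute map.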

Such is my oversimplified example of the 3D interpretation for a stratigraphic target for an actively drilling client. In this scenario, I have suggested that the full 3D voxel interpretation is more time consuming and resource hungry than a profile based interpretation, and not necessarily any better. This has certainly been my experience to date on several projects, but I do believe that significant changes are on the way and I address this later in my vision of ‘the future of 3D interpretation’.

I contend that there is often little business incentive for interpreters to expand their technical toolboxes to include 3D voxel interpretation under this Scenario.

Scenario 2. The Structural Target in a High Profile Exploratory Project

Now let’s consider a structural target in a ‘Big E’ project (does anybody even remember these?!). In voxel interpretation, the interpreter can do a reconnaissance scan through the 3D volume by adjusting time windows, opacity and colour palette. The interpreter probably already has an idea of the structural trend and the fault pattern, but visualization is a definite assist in sorting through the complexities of the fault blocks. Shallow geobodies give clues to the structural drape, while deep seismic markers or geobodies provide a view of the geology (still in two-way time) beneath the detachment. Fault planes are investigated and tentatively connected, intersected and transferred. But even after this quick recon, the interpreter will still have to perform more detailed work to tie geobodies to well control, identify and pick key horizons, and probably make some time, velocity and depth maps. Things are going great for this interpreter, but he needs a coffee after all this thinking in 3D.

In this structural scenario, the interpreter has had to endure many of the systems, database, loading, network communication and other issues that face the stratigraphic interpreter above. However, it seems that the structural explorer may reap some more dividends from his struggles.

Once the interpreter has created some initial maps of the structure, some detailed collaboration with the geologist would certainly be in order. In this process, the visualization display can be valuable as it allows the team to interactively test out their theories and models on the 3D data. After much spinning, brainstorming and 3D gyrating, it is inevitable that this geoscience team is going to stop and display a good old-fashioned seismic profile dip line just to get their equilibrium.

Meanwhile, the geologist excuses himself while he picks up some Gravol at the drugstore. Secretly, the geophysicist does some detail picking within the fault blocks in 2D profile mode. The geologist returns, a working hypothesis is established and the two geoscientists slap each other on the back and rejoice in the fact that ‘at least WE can think in 3D’.

After days and maybe weeks of 3D visualization, the geoscientists have an integrated interpretation and have consulted with a reservoir engineer. Now they are ready to talk with the drilling engineer to plan a three-dimensional well course to the target, with some attention to secondary zones. Now the 3D visualization is really paying off. The engineers will declare, with much bravado, that they can deviate the well any way you want, but at a price. So decisions and compromises are necessary, and 3D visualization is a key tool for understanding and finalizing the optimum well course that will accomplish the exploration objective.

It is also likely that the 3D vis centre will get further use while the well is drilled, to view the progress of the bit within the 3D data volume using the latest directional survey data. Decisions to revise the well course or to whipstock may have to be made based on the latest data received from the well. Since the well is ‘Big E’, it is also high profile with the company’s executive, and the president and a director or two might want to visit the vis centre to check on progress.

This is a somewhat light-hearted look at 3D interpretation for a structural exploratory target. In this scenario, I have suggested that full 3D voxel interpretation is more appropriate and justifies the extra time and resources needed. This voxel and visualization interpretation may not necessarily be better than a profile-based interpretation. However, the vis centre as a catalyst for team interaction and decisions is a plus.

It has certainly been my experience, and I continue to have the perception, that 3D visualization interpretation is most appropriate for more complex structural projects. I would also extend this opinion to Reservoir Simulation, where an integrated team of geologists, geophysicists, reservoir engineers and drillers dissects a producing field and attempts to optimize development. In a Reservoir Simulation and Production Enhancement project, the full power of 3D voxel attributes, seismic facies and geobody analysis can help to guide highly deviated, high-productivity wells.

The Bottom Line

In Scenario 1, the interpreter has little business incentive to use 3D voxel and vis techniques if the client is focused on short-term, bottom-line measures of performance. If lucky, the interpreter may be able to demonstrate improved, measurable business performance of a drilling program, but at some risk of failing to provide timely locations.

In Scenario 2, the interpreter is probably dealing with a client that tolerates and sees benefit in a more advanced 3D interpretation. The interpreter is well advised to make use of 3D visualization, and perhaps voxel interpretation, but most certainly to engage with his geologic and engineering colleagues to plan a better project. The benefits of a vis centre in demonstrating the complexities of the project to decision makers are considerable.

In the case of a Reservoir Simulation and Production Enhancement project, 3D voxel and vis tools are virtually an imperative. It is difficult to conceive any technical or business reason not to use the full power of these tools.

Considering the two scenarios and the reservoir simulation case, it is instructive to consider what most interpreters in our industry do on a daily basis. In Canada, I estimate that more than 80% of E&P geophysical interpreters work under Scenario 1. Scenario 2 interpreters make up maybe 10-15% of this group, and only a handful of interpreters are involved with Reservoir Simulation projects. I’ll admit that my population estimates are not very scientific, but I think most would agree with my proportions.

From this, I conclude that many interpreters who consider 3D voxel interpretation and visualization have little motivation to expand their training and apply these technical skills to their current project work. The business customers for their work are not inclined to reward the effort and may, in fact, have little tolerance for it if such work threatens short-term, bottom-line targets. A minority of our colleagues have more incentive and support from business customers for advanced 3D interpretation.

The Future of 3D Interpretation

It is my opinion that 3D volume interpretation analysis is an inevitable way of the future. Already, interpreters are seeing the advent of new generation data interpretation systems that have great improvements in user interface and that are based on cheap, efficient and accessible PC platforms. Barriers to data loading, manipulation and output are beginning to fall. Pricing of these systems is moderating and performance is ever improving.

Many geophysicists, both the fossilized and the greenhorn, are beginning to actually think in 3D. The ready availability and diversity of attribute algorithms that can be mapped, crossplotted, clustered, ordered and otherwise manipulated have increased dramatically in the recent past. 3D visualization and interpretation offer an obvious way to present all these different information domains in a logical and understandable format.

Further, some progressive thinking companies are starting to encourage and support their geophysicists to update their technical toolboxes. Strategically, these companies recognize that more thorough interpretations that include visualization and a variety of attributes are more likely to add to bottom line measurements, at least in the longer term.

Interpreters’ skills in advanced 3D interpretation are likely to grow. With more usage, tangible improvement in business results is likely. 3D workflows will streamline and become as efficient as profile methods, with reduced barriers to input and output.

I predict that this will lead interpreters to select the most appropriate interpretation tools for each project, with less background noise regarding “HOW” they perform their work.

K. I. (Ken) Mitchell

K A PROJECTS LTD.

Calgary

Answer 3

Seismic visualization, when initially introduced to the industry, was acclaimed as the next great advancement in seismic interpretation technology. Its usage, however, has long been restricted to a small segment of the interpreting community. Its most widespread usage has occurred within the major exploration and production companies, where substantial resources have been dedicated to it. These resources include large rooms with controlled lighting, large-scale projectors, dedicated computer servers and dedicated technicians to operate the software while interdisciplinary teams debate the relative merits of multi-million-dollar drilling locations. From the outset, the technology has been geared towards high-profile, large-budget drilling programs involving a host of geophysicists, geologists, engineers and managers. When first introduced, it was the “gee-whiz” technology that everyone wished they could play with; a “techno-envy” similar to that of 3D technology when it first appeared in the early 1980’s.

My argument for the lack of popular acceptance of 3D visualization technology is twofold: The first argument relates to the generation gap and the resistance of present day decision makers to embrace and endorse the technology. The second, derivative argument relates to the popularization of technology and my perception of the forces of market acceptance.

The lag between the introduction of a new technology and its universal acceptance seems to be between 10 and 20 years. As an example, the practical concept of working with AVO was introduced by Ostrander in the early 1980’s, yet the technique has only gained mainstream acceptance within the last few years. This is what I refer to as the natural technology gap. I think if we honestly look at it, new technologies such as AVO only become popular when a newer, younger generation of interpreters looks at the techniques with a fresh, unbiased viewpoint and asks the key question “How can I use this technology?” I submit that the majority of the old timers (20+ years of experience) tend to ask the question “What good is this technology?” While the questions are similar, the implied attitudes are quite different.

As a particular example, let us look at the process of synthetic seismogram correlation. The best theoretical way to tie a synthetic is to match the amplitude spectrum of the well impedance to the amplitude spectrum of the data and then adjust the phase of the data to tie to the known (usually zero) phase of the synthetic. Why is it, then, that the majority of seismic interpretation engines still attempt to mimic the old paper-to-paper correlation techniques used in the 1980’s? In those techniques, a synthetic is generated with a bandpass and then visually matched to the data, without any real attempt to match the amplitude spectrum of the synthetic to the data or to correct the phase of the data except by simple bulk phase shifts; the quality of the tie relies heavily on visual inspection. While the spectral approach is clearly technically superior, the inertia of popular usage keeps the older technique alive. That practice is clearly a vestige of paper interpretation, with which the modern generation of seismic interpreters is unfamiliar. I think this operational inertia is at the root of the question about the acceptance of new paradigms of seismic interpretation.
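The spectral tie described above can be sketched as two steps: build a zero-phase wavelet whose amplitude spectrum follows the data and convolve it with the well reflectivity to form the synthetic, then search for the phase rotation of the data that best matches that zero-phase synthetic. This Python sketch assumes a single constant (frequency-independent) phase error, roughly white reflectivity, and a wavelet taken from the smoothed amplitude spectrum of the trace itself; these are simplifying assumptions, and all function names are hypothetical rather than taken from any interpretation package.

```python
import numpy as np


def analytic_signal(x):
    """x + i*H(x), built in the frequency domain (the same recipe scipy.signal.hilbert uses)."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)


def rotate_phase(x, degrees):
    """Constant (frequency-independent) phase rotation of a real trace."""
    return np.real(np.exp(1j * np.deg2rad(degrees)) * analytic_signal(x))


def zero_phase_wavelet(trace, half_length, smooth=5):
    """Zero-phase wavelet whose amplitude spectrum follows the trace's smoothed spectrum.

    Assumes roughly white reflectivity, so the smoothed trace spectrum approximates the wavelet's.
    """
    n = len(trace)
    amp = np.abs(np.fft.rfft(trace))
    amp = np.convolve(amp, np.ones(smooth) / smooth, mode="same")
    full = np.fft.fftshift(np.fft.irfft(amp, n))   # real, symmetric, peak at n // 2
    c = n // 2
    w = full[c - half_length:c + half_length + 1]
    return w / np.max(np.abs(w))


def best_constant_phase(data, synthetic, step=1.0):
    """Scan phase rotations; return (degrees, correlation) of the rotation present in the data."""
    best_deg, best_cc = 0.0, -np.inf
    for deg in np.arange(-180.0, 180.0, step):
        rotated = rotate_phase(data, -deg)          # undo a trial rotation of `deg` degrees
        cc = np.dot(rotated, synthetic) / (np.linalg.norm(rotated) * np.linalg.norm(synthetic))
        if cc > best_cc:
            best_deg, best_cc = float(deg), float(cc)
    return best_deg, best_cc


# Test case: a zero-phase Ricker synthetic, and "data" that is the same trace rotated by 60 degrees.
tw = np.arange(-50, 51) * 0.002
ricker = (1 - 2 * (np.pi * 30 * tw) ** 2) * np.exp(-(np.pi * 30 * tw) ** 2)
rng = np.random.default_rng(0)
refl = np.zeros(500)
refl[rng.choice(np.arange(60, 440), 25, replace=False)] = rng.normal(size=25)
synthetic = np.convolve(refl, ricker, mode="same")
data = rotate_phase(synthetic, 60.0)
deg, cc = best_constant_phase(data, synthetic)
```

In use, the synthetic would be `np.convolve(reflectivity, zero_phase_wavelet(trace, 30), mode="same")`, with `reflectivity` computed from the well’s impedance log, and `rotate_phase(trace, -deg)` would then bring the data to the synthetic’s zero phase. A frequency-dependent phase correction would replace the single scanned angle.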

When seismic workstations were first introduced, a major selling point of some of the systems that eventually became most popular was the “ease-of-use” argument. The systems were designed to feel familiar to the seismic interpreter, most often by mimicking the traditional paper world. If you doubt this, ask yourself why the most popular display on most interpreters’ workstations is a variable-area wiggle-trace display at 7.5 inches per second and 15 traces per inch. Why is such a scale so common? Those of us old enough to remember realize that this was the most convenient scale and display type used by seismic processing houses to plot paper data. These displays, and variants of them, are remnants of what a previous generation of seismic interpreters employed to “visualize” seismic data. While the “traditionally common” display is popular, many arguments can be made that other displays are superior. The modern generation of seismic interpreters should have no emotional attachment to any previously popular seismic display, having grown up in a largely workstation-dominated environment. Popular usage, however, has preserved displays that have no real reason to be standard in a workstation environment.
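To see how the paper convention translates to a screen, the scale can be worked through numerically. This sketch assumes a 96 dpi monitor, 2 ms sampling and a hypothetical 2 s, 600-trace line; none of these values come from the text, and the function names are illustrative.

```python
def section_size_inches(record_s, n_traces, ips=7.5, tpi=15.0):
    """Plotted (height, width) in inches of a section at a paper scale.

    ips: inches per second of two-way time; tpi: traces per inch.
    """
    return record_s * ips, n_traces / tpi


def screen_pixels(ips=7.5, tpi=15.0, dpi=96.0, sample_s=0.002):
    """(pixels per time sample, pixels per trace) when the paper scale is drawn on a screen.

    dpi and sample_s are assumed values for illustration.
    """
    return ips * dpi * sample_s, dpi / tpi


height, width = section_size_inches(2.0, 600)
px_per_sample, px_per_trace = screen_pixels()
```

At 7.5 inches per second and 15 traces per inch, that hypothetical line plots 15 inches tall and 40 inches wide; on the assumed monitor each 2 ms sample gets about 1.44 pixels and each trace 6.4 pixels.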

3D seismic visualization is a child of the computer era. It really has no “old school” equivalent and, as such, will be a new paradigm for many interpreters who share a paper-dominated upbringing. Those best equipped to embrace such new technologies will be the interpreters most open to the possibilities that computer-based interpretation provides. They will most often be the new generation of seismic interpreters, those brought up in the era of computer graphics and video game technology.

One of the reasons that seismic visualization technologies are not seizing the imagination of the interpretation community is the perceived cost of “getting there”. Until someone popularizes the technology as a vitally important, inexpensive, easy-to-understand tool that is easily implemented on a desktop, the old imagery of the “visualization center” will dominate the thinking of any interpreter considering the application. In fact, inexpensive (even gratis) desktop applications of such technology have existed for the past few years and continue to be developed. As an example, the European product OpendTect extensively employs visualization technology within a desktop environment. The difficulty is that such products are so far from the conservative “mainstream” of software development that advocates of products like OpendTect are akin to Macintosh users in an MS-DOS-dominated universe. Historically, the best technology does not always triumph in the commercial arena. Examples are the VHS vs. Beta video recording formats, or the triumph of the MS-DOS-based PC over the technically superior Macintosh and Amiga computers. In the end, technologies that are perceived to be more universally accepted seem to dominate a market, even if the alternative is technically superior, easier to employ and understand, and has a lower cost of entry. As such, major software vendors who regularly get their message out in lavish advertisements have maintained the higher profile within the industry and tend to reinforce the misconception that only high-end workstations are capable of employing visualization technology.

The final aspect that hampers the rapid, universal adoption of visualization technology is the perception among present-day decision makers in the industry that it is little more than eye candy for management. As visualization technology is currently used, the interpreter must already be familiar with the data before employing visualization techniques. The zone of interest must be understood well enough for the interpreter to appreciate the nuances of the reflection response. This is especially true in basins such as the WCSB, where most reservoir zones occur near the tuning thickness of the wavelet in the data. Visualization techniques, while helpful in initial evaluation of the data, do not realize their full potential until they are applied to a particular interpretation of the data. This chicken-and-egg scenario means that the data have been reviewed and visualized in the mind of the interpreter long before they are input to a visualization software package. For the experienced interpreter, such visualization technology serves more as confirmation of a developing interpretation than as a tool to develop one.
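The tuning thickness mentioned above is commonly approximated as a quarter of the dominant wavelength (λ/4, with λ = v/f). A minimal sketch follows; the function names are hypothetical, and the 4000 m/s interval velocity and 40 Hz dominant frequency used in the check are illustrative assumptions, not WCSB values.

```python
def tuning_thickness_m(velocity_m_s, dominant_freq_hz):
    """Quarter-wavelength approximation of tuning thickness, in metres."""
    wavelength = velocity_m_s / dominant_freq_hz   # lambda = v / f
    return wavelength / 4.0


def below_tuning(thickness_m, velocity_m_s, dominant_freq_hz):
    """True if a bed is thinner than the quarter-wavelength tuning thickness."""
    return thickness_m < tuning_thickness_m(velocity_m_s, dominant_freq_hz)
```

With those assumed numbers the wavelength is 100 m and tuning is about 25 m, so a 15 m reservoir would sit below tuning, where amplitude and apparent thickness are no longer independent.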

Seismic visualization technology is an application at the forefront of seismic interpretation. Because it has no roots in the traditions of the current industry leaders, it will not have a dominant position in the work environment until the next generation (the Nintendo generation, if you will) rises to lead the industry and can influence the standards of practice. Seismic visualization is just a bit ahead of its time. In 10 years it will be more dominant, and we will be looking to a subsequent generation’s contributions to be accepted.

Doug Pruden

Nose Creek Geophysical Inc.

Calgary

Answer 4

The objective of the newer 3D visualization techniques, and what sets them apart from conventional 2D section-based (inline, crossline, or time-slice) interpretation, is to view complex subsurface data optimally and realistically in a connected, intuitive, graphical fashion that the human senses can more easily grasp. This has become even more important as the variety of multidisciplinary data being integrated grows, along with the number of coincident 3D seismic attribute cubes generated from a single SEGY volume. A 3D visualization system is the best way to rapidly investigate and see the interplay of these multidisciplinary data. I use many of these techniques routinely on all but the simplest of projects.

To try to answer why these techniques have been adopted so slowly, we must first summarize what some of them are.

Some Newer Techniques

First, let us discuss some of the newer ways of analyzing data beyond conventional section-based interpretation.

The current visualization techniques can be broadly broken into two groups:

- 3D visualization of geometric surfaces such as traditional sections, horizons, and fault planes.

- 3D volume-based visualization of “clouds” of semi-transparent seismic voxels which have been manipulated into formation-sculpted bodies, fault-sculpted bodies, or slabs of voxels of varying thicknesses and orientations.

3D Visualization of Geometric Surfaces and Sections

Conventional seismic workstations typically display section-based data as flat screen images of inlines, crosslines, and timeslices for interpretation, with the outcome typically presented as contour maps.

Most modern interpretation systems with 3D visualization capabilities display this same information within a 3D environment in which it is far easier for the human brain to comprehend the complex inter-relations of the surface and fault geometries, deviated well penetrations, amplitude or other attribute changes.

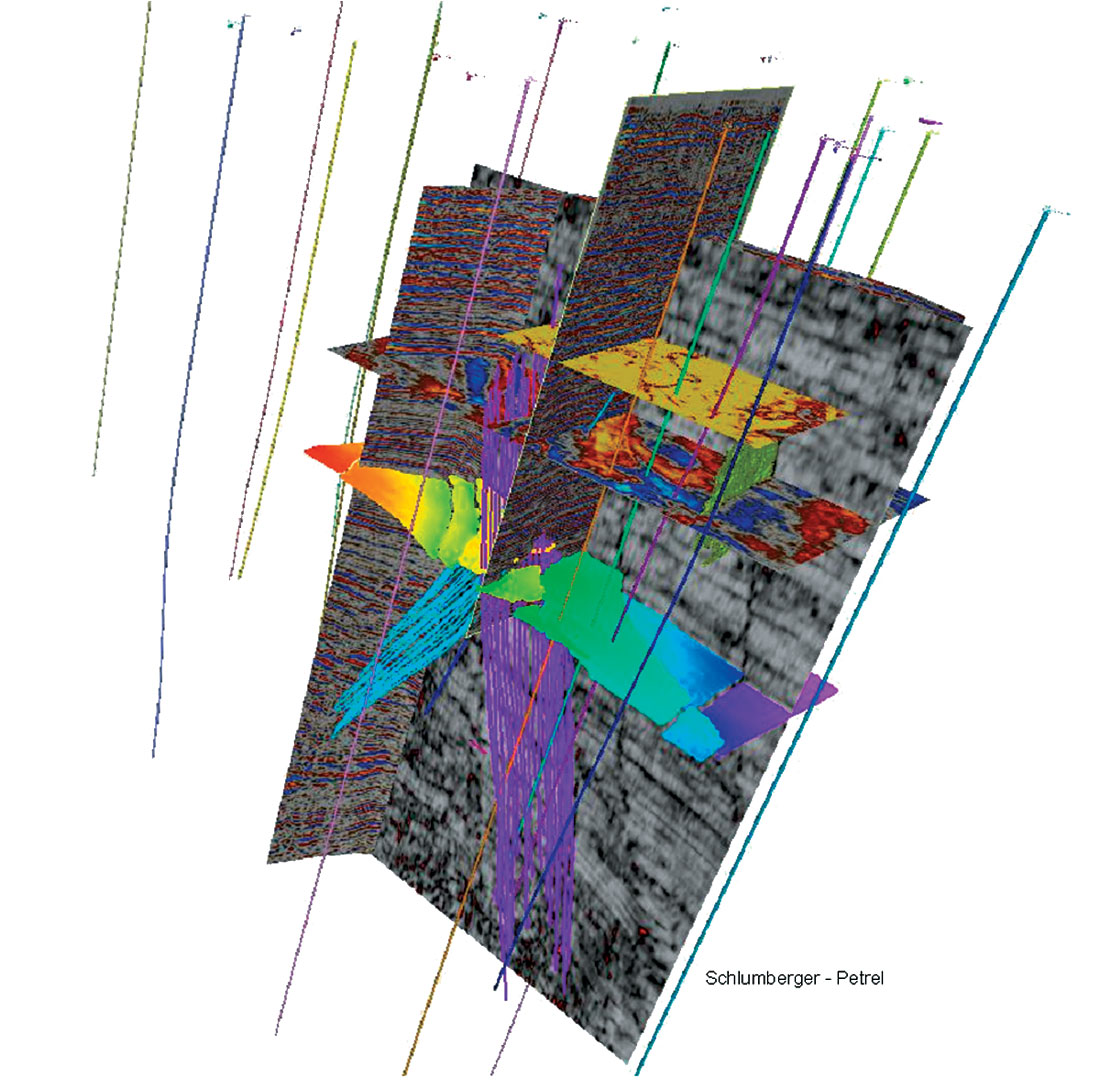

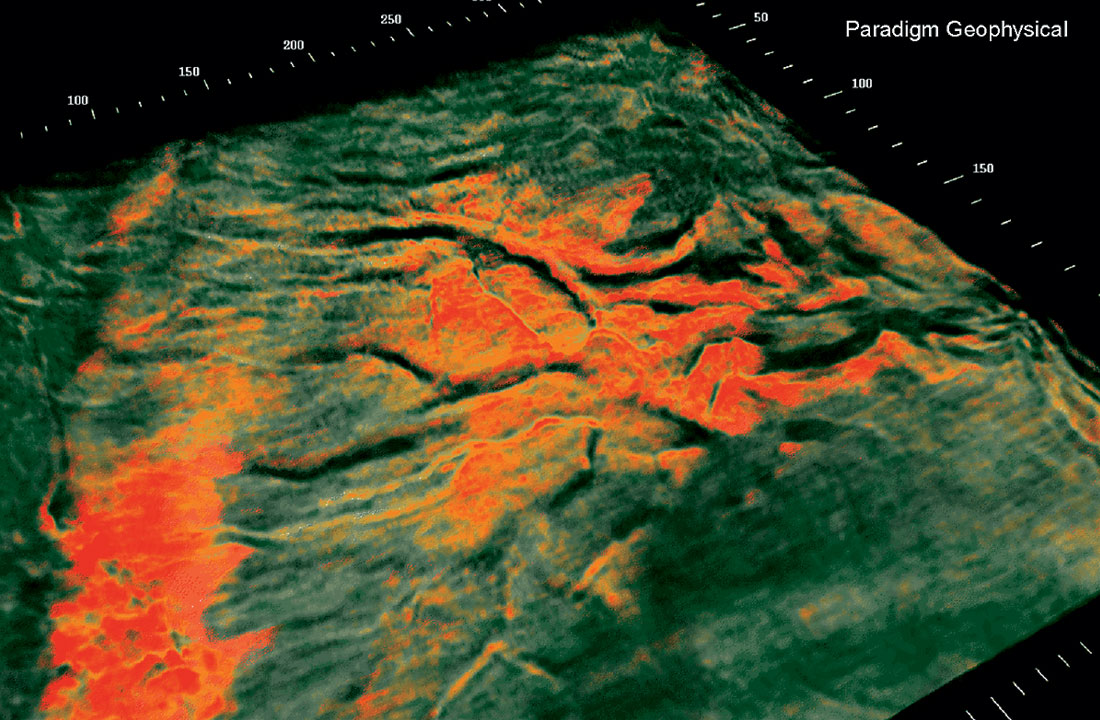

In Figure 1, combined traditional 2D section displays of both the migrated SEGY data and the coherency data enable the interpreter to refine the fault interpretation while visualizing its impact on the embedded “geometric object”, the horizon. The interpreter can do a better and more efficient job because he is better able to watch the interpretation “grow” and to modify his conceptual thinking during the interpretation process.

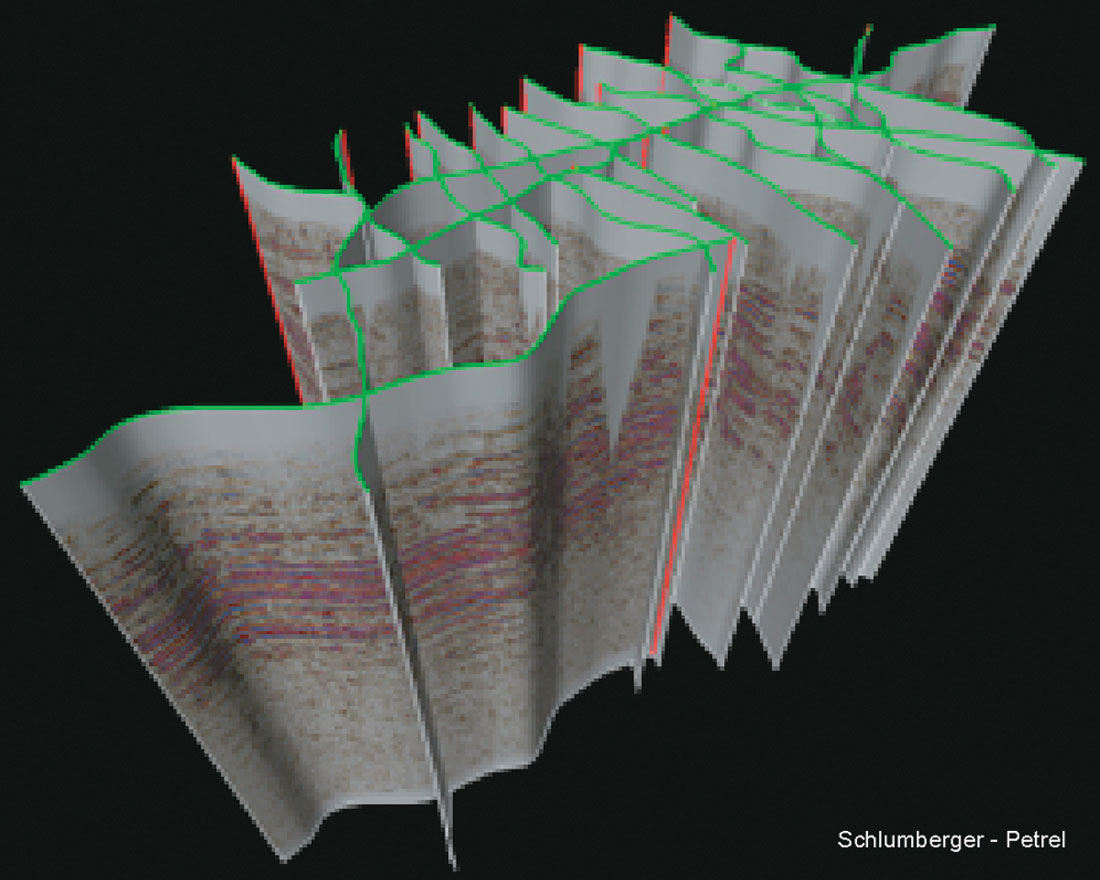

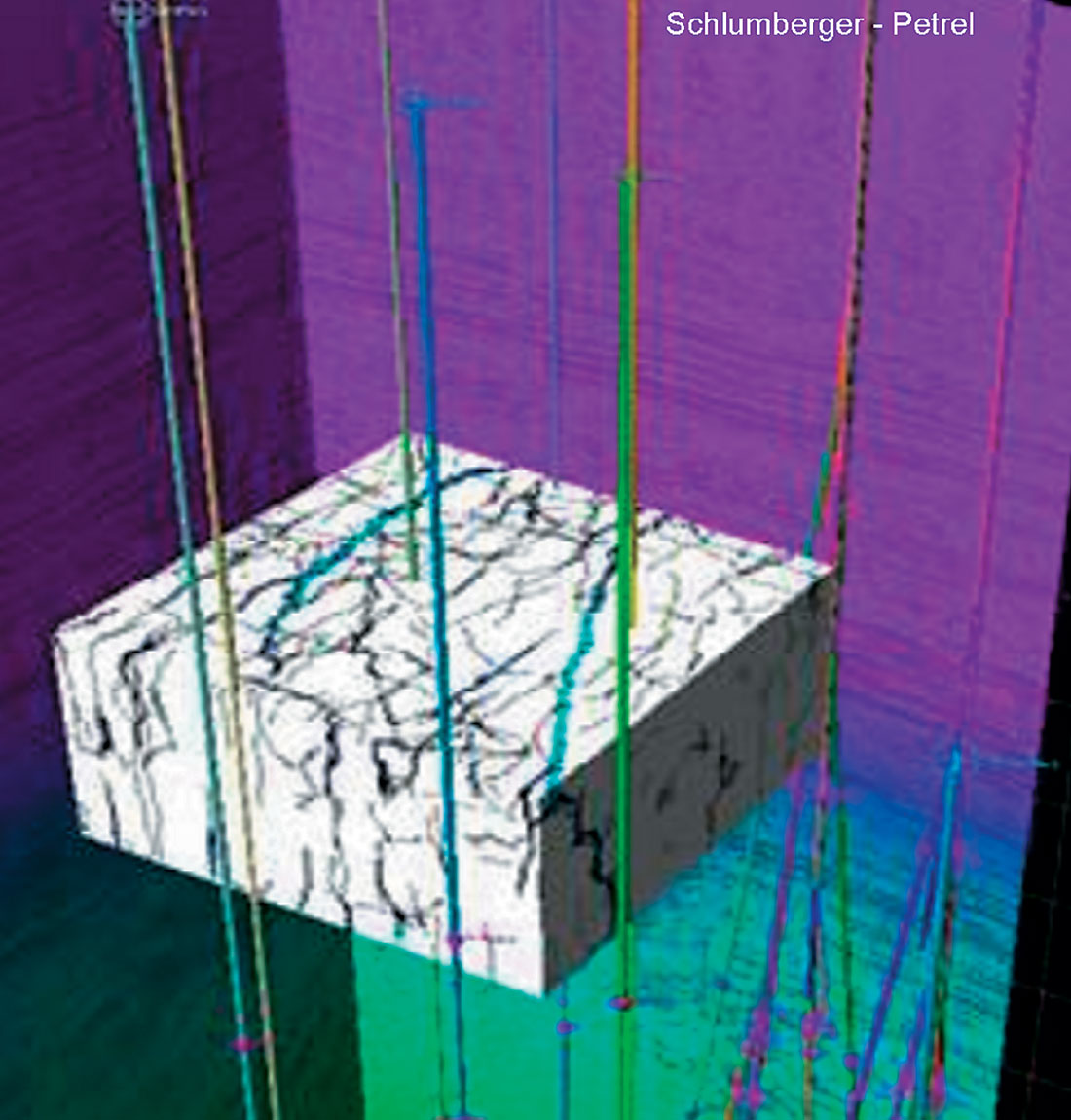

Other traditional 2D section-based data such as grids of 2D seismic data (Figure 2) may also be more easily comprehended within these visualization environments. Complex well intersections or fence diagrams can also be generated between wells within the 3D volume to more directly relate the known wellbore geology to the seismic.

3D “Volume-based” Visualization

Many interpreters are still working section-based interpretations on individual 2D-profiles, one at a time, and then producing a contoured map of the resulting line-based horizon picks.

While there are distinct advantages to performing “traditional” section-based interpretation of horizons and faults within a 3D environment, as shown in Figure 1, these techniques differ from “volume-based” interpretation of the data.

Volume-Rendering and Opacity (Transparency) Control

3D volume rendering is the form of 3D visualization that offers the greatest opportunities for exploring within 3D volumes, but it is perhaps the least utilized of the newer techniques. It relies on opacity control (modifying the transparency of voxels according to the value of a particular attribute) to view “inside” all or part of the 3D volume. It is 3D visualization in a truer sense.

These techniques are akin to CAT-scan medical imaging, in which a physician may filter away data to reveal an anomalous tumor.

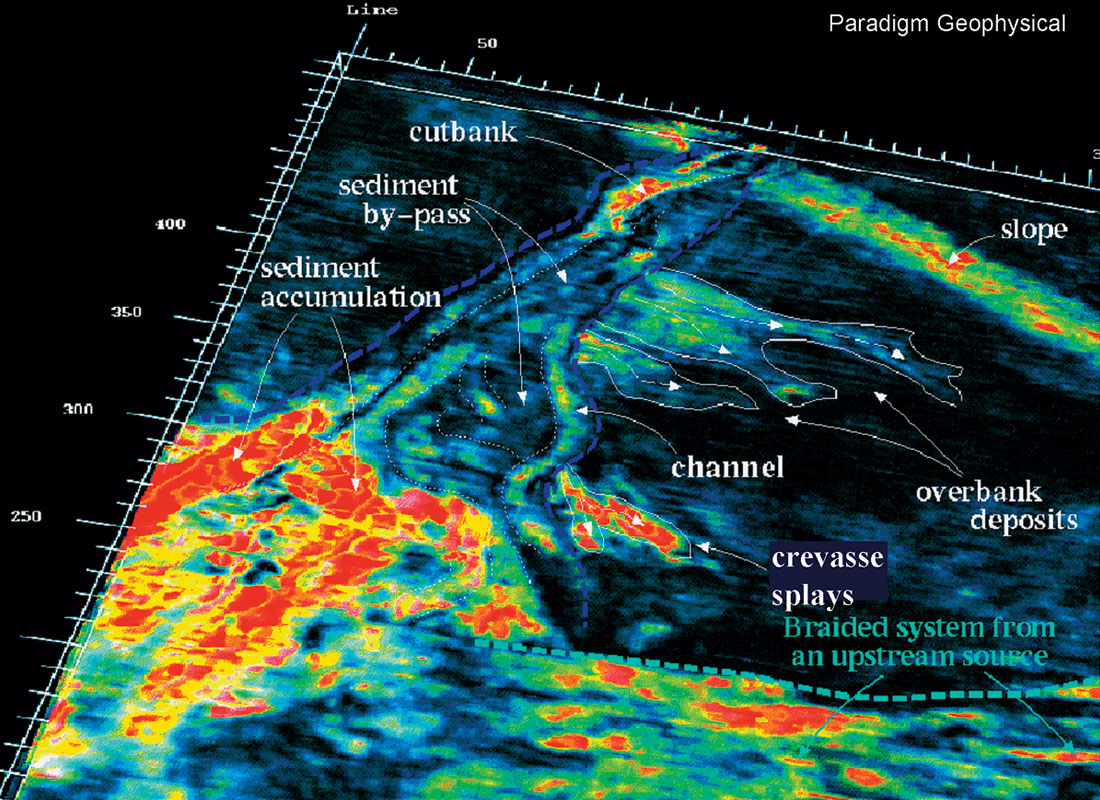

In the geoscience world, we “data mine” while volume rendering. Opacity control lets you examine the entire 3D volume at once, making voxels that are not of interest transparent and showing those that are, in their proper 3D perspective relative to one another. Just as a physician finds tumors with medical imaging, we may see stratigraphic depositional features such as channels, fans, crevasse splays, and reefs, or structural features such as faults, simply by filtering the raw data and without any interpretation bias.
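To make opacity control a little more concrete, here is a minimal sketch of how a renderer might combine it with front-to-back compositing along a single viewing ray. It assumes a simple “window” transfer function (voxels whose amplitude falls inside a chosen range get a fixed opacity, all others are fully transparent) and uses the amplitude directly as grey-scale intensity. The function names and parameters are hypothetical; commercial packages use far richer transfer functions and colour maps.

```python
def window_opacity(amplitude, lo, hi, alpha_in=0.5):
    """Hypothetical transfer function: semi-opaque inside [lo, hi], transparent outside."""
    return alpha_in if lo <= amplitude <= hi else 0.0


def composite_ray(samples, lo, hi, alpha_in=0.5):
    """Front-to-back compositing of the voxels along one viewing ray.

    samples are voxel amplitudes ordered from the viewer outward; each
    amplitude doubles as its grey-scale intensity. Returns the accumulated
    (intensity, opacity) seen by the viewer.
    """
    intensity, acc_alpha = 0.0, 0.0
    for amp in samples:
        a = window_opacity(amp, lo, hi, alpha_in)
        # Each voxel is attenuated by the opacity already accumulated in front of it.
        intensity += (1.0 - acc_alpha) * a * amp
        acc_alpha += (1.0 - acc_alpha) * a
        if acc_alpha >= 0.99:  # early ray termination: nothing behind remains visible
            break
    return intensity, acc_alpha


# Hypothetical ray: only the bright voxel (0.9) falls inside the window.
print(composite_ray([0.1, 0.9, 0.2], lo=0.5, hi=1.0))  # → (0.45, 0.5)
```

Narrowing or widening the window plays the role of dragging the opacity curve in an interpretation package: voxels outside it contribute nothing, so whatever lies behind them shows through.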

3D volume visualization allows the interpreter to comprehend large volumes of data rapidly and gain insights about both regional and prospect-specific geological features. Complex stratigraphic systems and complex fault systems are often much more apparent. The techniques are also useful for lead identification and prioritization.

As a 3D seismic volume is rendered, perhaps using opacity control or a stacked “slab” of voxels, depositional features such as clastic channels and carbonate buildups, or areas of anomalous character such as bright spots, may appear. If these objects have amplitudes that differ from the surrounding host rock, one or more “seeds” may be planted within them for “sub-volume detection” based on a range of amplitude or gradient values, which can be further constrained by formation boundaries, faults, or user-defined polygons.
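Seeded sub-volume detection of this kind is, in essence, region growing. The sketch below shows one minimal way to do it, assuming a small volume held as nested lists indexed [inline][crossline][sample], 6-connected neighbours, and an amplitude window. The optional `allowed` predicate is a hypothetical stand-in for formation, fault, or polygon constraints; this is not any particular vendor’s algorithm.

```python
from collections import deque

# The six face-sharing neighbours of a voxel (inline, crossline, sample).
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def detect_subvolume(volume, seeds, lo, hi, allowed=None):
    """Grow a body from seed voxels through connected voxels with lo <= amplitude <= hi.

    volume  : nested lists indexed volume[inline][crossline][sample]
    seeds   : iterable of (inline, crossline, sample) tuples
    allowed : optional predicate (il, xl, t) -> bool, a hypothetical stand-in
              for formation, fault, or polygon constraints
    Returns the set of voxel indices belonging to the detected body.
    """
    n_il, n_xl, n_t = len(volume), len(volume[0]), len(volume[0][0])

    def accept(il, xl, t):
        if not (0 <= il < n_il and 0 <= xl < n_xl and 0 <= t < n_t):
            return False
        if not lo <= volume[il][xl][t] <= hi:
            return False
        return allowed is None or allowed(il, xl, t)

    body = {s for s in seeds if accept(*s)}
    queue = deque(body)
    while queue:  # breadth-first flood fill outward from the seeds
        il, xl, t = queue.popleft()
        for d_il, d_xl, d_t in NEIGHBOURS:
            n = (il + d_il, xl + d_xl, t + d_t)
            if n not in body and accept(*n):
                body.add(n)
                queue.append(n)
    return body


# Hypothetical 3 x 3 x 1 volume: a bright, bending "channel" plus one isolated bright voxel.
vol = [[[1.0], [0.0], [1.0]],
       [[1.0], [1.0], [0.0]],
       [[0.0], [1.0], [0.0]]]
print(sorted(detect_subvolume(vol, [(0, 0, 0)], lo=0.5, hi=2.0)))
```

The isolated bright voxel at (0, 2, 0) is not picked up because it is not connected to the seed, which is the behaviour that lets a single seed follow one channel without grabbing unrelated bright spots.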

From an interpreter’s perspective, these volume-rendered images convey more about the “geology”, from both a depositional and a structural perspective, than a conventional contoured time-structure map does. Visualization systems allow depositional patterns to be identified and visualized far more effectively than section-based interpretation does.

Sub-Volume Roaming

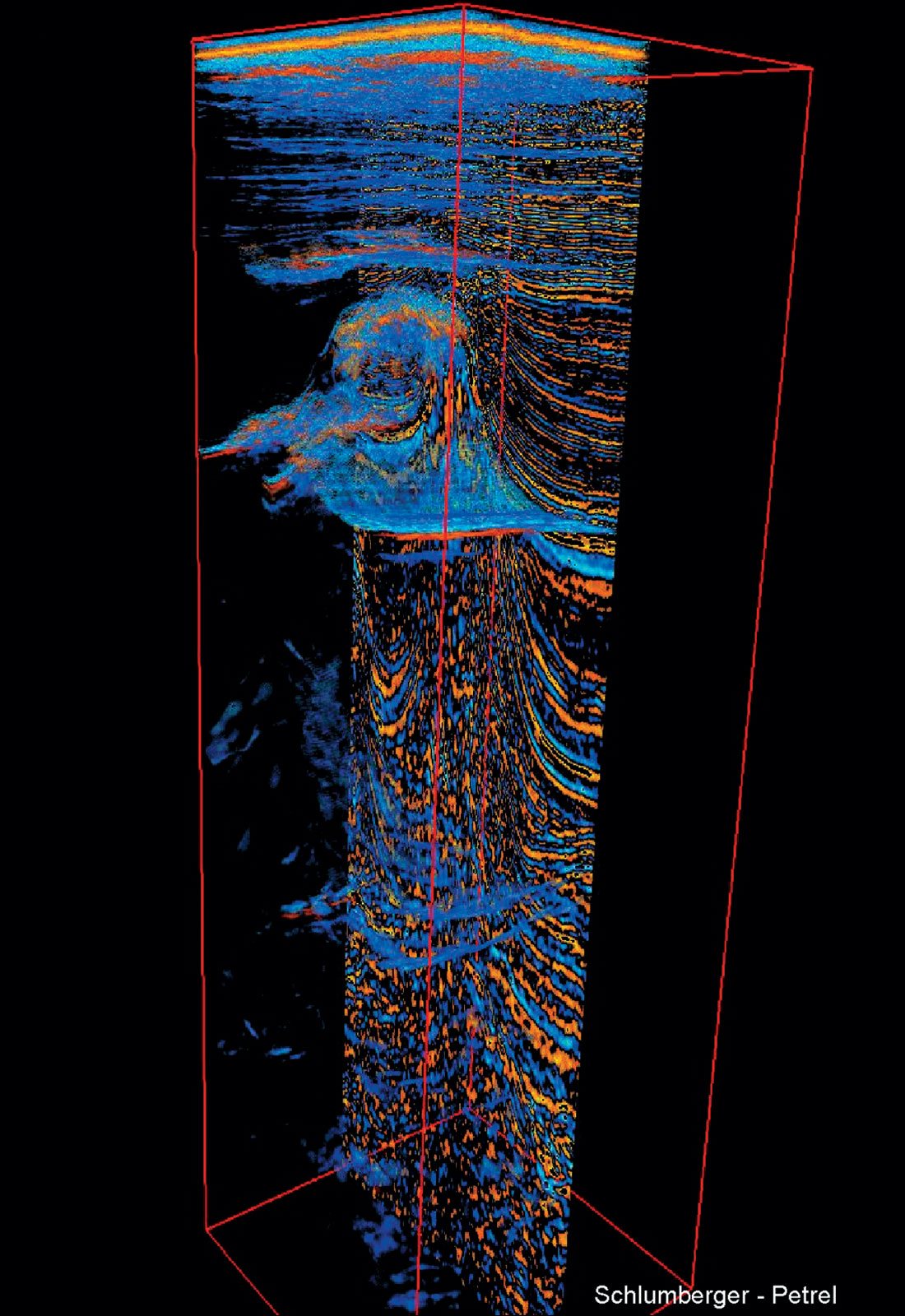

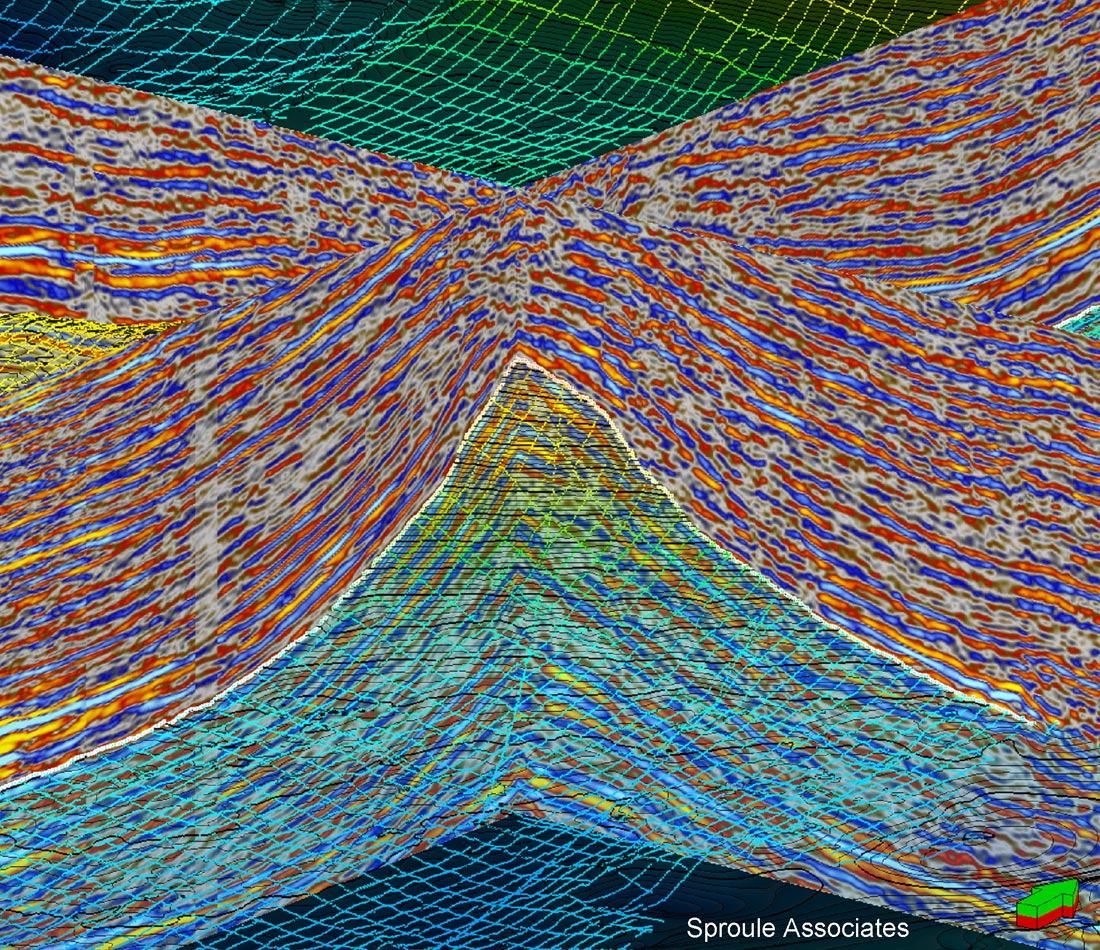

Depending on hardware limitations, the entire volume may be viewed to gain rapid insights into the regional context of amplitude anomalies (Figure 3). With larger mega-3D volumes, however, hardware resources may be exceeded.

The whole volume need not be displayed at once, as this may require an inordinate amount of RAM. Instead, the interpreter can work with limited portions of the data: vertically stacking time slices into “slabs” to enhance detection of anomalous amplitude bodies or features, “formation sculpting”, “fault-block sculpting”, or “sub-volume roaming” through the seismic volume to answer specific questions.
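A little arithmetic shows why a large volume may not fit in memory. The survey dimensions below are hypothetical, and the sketch assumes 32-bit samples; a copy scaled to 8-bit samples would need a quarter of the memory.

```python
def volume_bytes(n_inlines, n_crosslines, n_samples, bytes_per_sample=4):
    """Memory needed to hold a regular 3D volume (4 bytes per 32-bit float sample)."""
    return n_inlines * n_crosslines * n_samples * bytes_per_sample


GIB = 1024 ** 3

# Hypothetical "mega-3D" survey: 2000 inlines x 2000 crosslines x 1500 samples.
full = volume_bytes(2000, 2000, 1500)
slab = volume_bytes(2000, 2000, 50)    # a 50-sample slab through the same survey
roam = volume_bytes(300, 300, 1500)    # a 300 x 300 trace roaming sub-volume
for name, size in (("full volume", full), ("slab", slab), ("sub-volume", roam)):
    print(f"{name}: {size / GIB:.2f} GiB")
```

With these assumed numbers the full volume needs roughly 22 GiB, while the slab and the sub-volume each need well under 1 GiB, which is why slabs and roaming windows remain practical on modest hardware.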

Figure 6 shows an example of sub-volume roaming where a coherency cube is being roamed through the larger 3D volume to view local faulting.
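Sub-volume roaming can be thought of as sliding a fixed-size window across the survey and working only on that window. The sketch below, assuming a coherency volume held as nested lists indexed [inline][crossline][sample], steps such a window across the inline/crossline grid and reports the window with the lowest mean coherence as a crude pointer to faulting. The function names, window size, and step are illustrative choices, not features of any package.

```python
def roam(volume, size_il, size_xl, step):
    """Yield (il0, xl0, sub_cube) for a window stepped across the inline/crossline grid.

    Every sample of each trace inside the window is kept. Here the whole toy
    volume is already in memory; a real implementation would read only the
    current window from disk.
    """
    n_il, n_xl = len(volume), len(volume[0])
    for il0 in range(0, n_il - size_il + 1, step):
        for xl0 in range(0, n_xl - size_xl + 1, step):
            sub = [row[xl0:xl0 + size_xl] for row in volume[il0:il0 + size_il]]
            yield il0, xl0, sub


def mean_value(sub):
    values = [v for row in sub for trace in row for v in trace]
    return sum(values) / len(values)


def least_coherent_window(coherency, size_il, size_xl, step):
    """(il0, xl0, mean) of the window with the lowest mean coherence."""
    windows = ((il0, xl0, mean_value(sub))
               for il0, xl0, sub in roam(coherency, size_il, size_xl, step))
    return min(windows, key=lambda w: w[2])


# Hypothetical 4 x 4 x 1 coherency cube with a low-coherence lineament in one corner.
coh = [[[1.0] for _ in range(4)] for _ in range(4)]
coh[2][2] = [0.2]
coh[3][3] = [0.2]
print(least_coherent_window(coh, 2, 2, 2))
```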

Other 3D visualization techniques, such as illumination and lighting control from low angles, can also show subtle structuring through shadows cast on the surface. Colour manipulation, in conjunction with opacity control, can be used to enhance particular attributes or amplitude ranges corresponding to a particular stratigraphic or structural feature.
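Low-angle illumination of a surface is essentially hill-shading. The sketch below applies simple Lambertian shading to a gridded horizon, assuming the grid holds height (for a time horizon, minus the two-way time, so that deeper is lower), rows run north and columns run east, the light azimuth is measured clockwise from north, and the grid is at least 2 x 2. The function name and conventions are hypothetical.

```python
import math


def hillshade(grid, azimuth_deg, elevation_deg, dx=1.0, dy=1.0):
    """Lambertian shading of a gridded surface lit from a given azimuth and elevation.

    grid[i][j] is surface height; i runs north (y), j runs east (x).
    Interior cells use central differences, edge cells one-sided differences.
    Returns values in [0, 1]; faces turned away from the light are 0.
    """
    ny, nx = len(grid), len(grid[0])
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    # Unit vector pointing towards the light (x = east, y = north, z = up).
    lx, ly, lz = math.sin(az) * math.cos(el), math.cos(az) * math.cos(el), math.sin(el)
    shade = [[0.0] * nx for _ in range(ny)]
    for i in range(ny):
        for j in range(nx):
            j0, j1 = max(j - 1, 0), min(j + 1, nx - 1)
            i0, i1 = max(i - 1, 0), min(i + 1, ny - 1)
            dzdx = (grid[i][j1] - grid[i][j0]) / ((j1 - j0) * dx)
            dzdy = (grid[i1][j] - grid[i0][j]) / ((i1 - i0) * dy)
            # Surface normal (-dz/dx, -dz/dy, 1), normalised before the dot product.
            norm = math.sqrt(dzdx * dzdx + dzdy * dzdy + 1.0)
            shade[i][j] = max(0.0, (-dzdx * lx - dzdy * ly + lz) / norm)
    return shade


# Hypothetical surface rising to the east, lit from the west and from the east at 15 degrees.
ramp = [[float(j) for j in range(3)] for _ in range(3)]
print(hillshade(ramp, 270, 15)[1][1], hillshade(ramp, 90, 15)[1][1])
```

At a low sun angle the west-facing slope is brightly lit from the west and falls completely into shadow when lit from the east, which is why low-angle lighting exaggerates subtle structure.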

Advantages of the Newer Techniques

3D visualization techniques allow the interpreter to comprehend large volumes of data rapidly. They are useful for lead identification and prioritization, for interpreting complex fault systems, and for gaining new insights into complex depositional systems and structural features that are less evident on conventional section-based displays of 3D data.

As illustrated in Figures 4 and 5, “opacity filtering” and “voxel rendering” can provide spectacular insights for both structural and stratigraphic visualization, just by filtering the raw 3D data cube and without any interpretation biases. Quite often, features which meander laterally or vertically within the 3D time or depth volume are not very apparent on inlines, crosslines, or time-slices. 3D volume-rendering is ideally suited to identifying these objects regardless of their orientation. Anomalous features can often be spotted during the voxel rendering process that would not likely be observed on conventional (inline, crossline, or timeslice) 2D displays.

It can be much faster than manual section-based interpretation to plant seeds to “sub-volume detect” a particular horizon and have the software fit a horizon through the detected voxels. Rendering through the 3D volume can then rapidly validate the resulting horizon. In one case, a client had spent months carefully picking, line by line, a channel that manifested itself as a small amplitude burst. The channel bifurcated several times and eventually became almost braided in character. He had 3D software capable of sub-volume detection but did not “trust” it to follow the subtle branching of the channel. Using the opacity control features, it took only a few minutes to isolate the channel in 3D space, plant several seed points, and have the software “pick” the entire channel system, in far greater detail and more consistently than the client’s manual picking. On the same volume, “data mining” through opacity manipulation also quickly identified some shallower channel systems that the interpreter had missed because they appeared only as weak amplitude changes on individual 2D planes through the 3D volume.
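Fitting a horizon through detected voxels can be as simple as reducing the detected body to one pick per trace. The sketch below is a hypothetical helper, not any package’s horizon-fitting method: it assumes the detected body is a set of (inline, crossline, sample) voxels, picks the strongest detected voxel in each trace (ties go to the shallower sample), and leaves traces with no detected voxels unpicked.

```python
def fit_horizon(volume, body):
    """One time-sample pick per trace from a detected body (hypothetical helper).

    volume is indexed volume[inline][crossline][sample]; body is a set of
    (inline, crossline, sample) voxels, e.g. the output of a seeded detection.
    Returns {(inline, crossline): sample}.
    """
    picks = {}
    for il, xl, t in body:
        key = (volume[il][xl][t], -t)  # strongest first, then shallowest
        best = picks.get((il, xl))
        if best is None or key > (volume[il][xl][best], -best):
            picks[(il, xl)] = t
    return picks


# Hypothetical two-trace volume and detected body.
vol = [[[0.2, 0.9, 0.8], [0.7, 0.7, 0.1]]]
body = {(0, 0, 1), (0, 0, 2), (0, 1, 0), (0, 1, 1)}
print(fit_horizon(vol, body))
```

A real workflow would then render the fitted surface back through the volume, as described above, to check that the picks follow the event.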

Animating through the 3D volume with horizontal “slabs” of semi-opaque voxels works like optical stacking to help identify leads for fairly flat lying events.
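A rough numerical analogue of animating a slab is to collapse a window of samples on every trace into one map value and step that window down through the volume. The sketch below assumes RMS or maximum-absolute amplitude as the collapsing statistic; this is a stand-in for what the renderer does optically with semi-opaque voxels, and the names are hypothetical.

```python
import math


def slab_map(volume, t_start, thickness, mode="rms"):
    """Collapse samples t_start .. t_start + thickness - 1 of every trace to one map value."""
    out = []
    for il_row in volume:
        row = []
        for trace in il_row:
            window = trace[t_start:t_start + thickness]
            if mode == "rms":
                row.append(math.sqrt(sum(a * a for a in window) / len(window)))
            elif mode == "maxabs":
                row.append(max(abs(a) for a in window))
            else:
                raise ValueError(f"unknown mode: {mode}")
        out.append(row)
    return out


def animate_slabs(volume, thickness, step, mode="rms"):
    """Yield (t_start, map) as the slab is stepped down through the volume."""
    n_t = len(volume[0][0])
    for t_start in range(0, n_t - thickness + 1, step):
        yield t_start, slab_map(volume, t_start, thickness, mode)


# Hypothetical volume: one inline, two traces, four samples each.
vol = [[[0.0, 3.0, 4.0, 0.0], [0.0, 0.0, 0.0, 0.0]]]
for t0, amp_map in animate_slabs(vol, thickness=2, step=1):
    print(t0, amp_map)
```

Because the slab sums energy over several samples, a flat-lying bright body that is only marginally visible on any single time slice stands out on the stacked map.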

“Formation sculpting” at the targeted reservoir can be used to isolate the interval between the top and base of a reservoir into a sculpted 3D volume(s) and works very effectively on more steeply dipping reservoirs. These isolated voxels over the reservoir can be further investigated through opacity control of individual or multiple 3D attributes together, or sliced in a variety of ways including proportionally between top and base reservoir to provide further insights into depositional patterns. For interpreters involved in reservoir development, this can give wonderful insights into a particular depositional system.
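Slicing proportionally between top and base reservoir can be sketched as follows: on each trace, take the time a fixed fraction of the way from the top pick to the base pick and interpolate the amplitude there. The sketch assumes regularly sampled traces starting at 0 ms with sample interval `dt` in ms, horizon picks in ms that lie inside the trace, and linear interpolation between samples; the function name is hypothetical.

```python
import math


def proportional_slice(volume, top, base, fraction, dt):
    """Amplitude map at a fixed fraction of the way from top to base reservoir.

    volume[il][xl][k] is the sample at time k * dt (ms); top[il][xl] and
    base[il][xl] are picked horizon times in ms, assumed to lie inside the
    trace. fraction = 0 gives the top, fraction = 1 gives the base.
    """
    out = []
    for il, il_row in enumerate(volume):
        row = []
        for xl, trace in enumerate(il_row):
            t = top[il][xl] + fraction * (base[il][xl] - top[il][xl])
            x = t / dt                                    # fractional sample index
            k = max(0, min(math.floor(x), len(trace) - 2))
            w = x - k                                     # linear-interpolation weight
            row.append((1.0 - w) * trace[k] + w * trace[k + 1])
        out.append(row)
    return out


# Hypothetical traces (amplitude rises linearly with sample index), 4 ms sampling.
vol = [[[0.0, 10.0, 20.0, 30.0, 40.0], [0.0, 10.0, 20.0, 30.0, 40.0]]]
top, base = [[4.0, 0.0]], [[12.0, 16.0]]
print(proportional_slice(vol, top, base, 0.5, dt=4.0))
```

Stepping `fraction` from 0 to 1 produces a sequence of slices that follow the reservoir’s internal geometry rather than a flat time, which is what makes the depositional patterns easier to see.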

“Fault sculpting” allows the interpreter to sculpt the viewed 3D seismic voxels to a volume confined by one or more fault planes. This can be useful in interpretation of cross-fault leak points.
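A simplified way to picture fault sculpting is to blank every voxel on the wrong side of one or more fault surfaces. The sketch below assumes each fault is a plane given by a point and a normal in (inline, crossline, sample) index space; real fault surfaces are rarely planar, so this is only an illustration, and the function names are hypothetical.

```python
def half_space(point, normal):
    """Predicate: is voxel (il, xl, t) on the side of a planar fault that its normal points to?"""
    p_il, p_xl, p_t = point
    n_il, n_xl, n_t = normal

    def keep(il, xl, t):
        return (il - p_il) * n_il + (xl - p_xl) * n_xl + (t - p_t) * n_t >= 0

    return keep


def sculpt(volume, keep):
    """Copy of volume with voxels rejected by keep() blanked to None (not rendered)."""
    return [[[amp if keep(il, xl, t) else None for t, amp in enumerate(trace)]
             for xl, trace in enumerate(row)]
            for il, row in enumerate(volume)]


# Hypothetical fault block bounded by two parallel faults at inlines 1 and 3.
fault_a = half_space((1, 0, 0), (1, 0, 0))
fault_b = half_space((3, 0, 0), (1, 0, 0))


def in_block(il, xl, t):
    return fault_a(il, xl, t) and not fault_b(il, xl, t)


vol = [[[1.0]], [[2.0]], [[3.0]], [[4.0]]]
print(sculpt(vol, in_block))
```

Combining half-spaces with `and` / `not` is one simple way to build a fault block; the sculpted copies on either side of a fault could then be rendered together to compare reservoir juxtaposition across it.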

“Multi-volume rendering” provides the ability to view multiple 3D volumes within the same coincident 3D space. Opacity thresholds and colours can be manipulated for each volume independently. One example is embedding the most incoherent events (faults and anomalous stratigraphic features) from a coherency volume within a conventional 3D SEGY volume as a guide while interpreting.
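The coherency-within-seismic example can be sketched as per-voxel blending of two coincident volumes, each with its own threshold. The sketch below assumes a grey-scale amplitude layer and a red coherency layer that is opaque only where coherence falls below a threshold, combined with ordinary “over” compositing; the colour choices, thresholds, and function names are hypothetical and much simpler than a full multi-volume renderer.

```python
def blend_voxel(amp, coh, amp_clip=1.0, coh_threshold=0.3, coh_alpha=0.8):
    """RGB colour of one voxel when an incoherence layer is drawn over an amplitude layer.

    Amplitude layer: grey scale, 0.5 = zero amplitude, clipped at +/- amp_clip.
    Coherency layer: red with opacity coh_alpha where coherence < coh_threshold,
    fully transparent elsewhere. Each layer has its own, independent threshold.
    """
    g = max(0.0, min(1.0, 0.5 + 0.5 * amp / amp_clip))
    base = (g, g, g)
    if coh >= coh_threshold:  # coherent voxel: only the seismic shows
        return base
    red = (1.0, 0.0, 0.0)
    # "Over" compositing of the red incoherence voxel on top of the grey seismic voxel.
    return tuple(coh_alpha * c_top + (1.0 - coh_alpha) * c_base
                 for c_top, c_base in zip(red, base))


def blend_volumes(amplitude, coherency, **kwargs):
    """Apply blend_voxel to two coincident volumes indexed [inline][crossline][sample]."""
    return [[[blend_voxel(a, c, **kwargs) for a, c in zip(a_tr, c_tr)]
             for a_tr, c_tr in zip(a_row, c_row)]
            for a_row, c_row in zip(amplitude, coherency)]


# Hypothetical single trace: a coherent zero-amplitude sample, then an incoherent peak.
print(blend_volumes([[[0.0, 1.0]]], [[[0.9, 0.1]]]))
```

Raising `coh_threshold` shows more of the coherency volume; lowering it leaves only the most incoherent events embedded in the seismic, without changing how the amplitude layer is displayed.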

3D visualization allows the interpreter much clearer views of complex wellbores both in the planning stage and while drilling, and the inter-relationship of multiple stacked horizons and bodies that are difficult to comprehend on section-based profiles.

Complex fault interpretation, on both 2D and 3D seismic datasets can be more readily understood by the interpreter and better conveyed to management in a 3D environment.

Problems with Adoption of Newer Techniques

Following the industry downturns in the early eighties and late nineties, when the shortage of experienced interpreters became apparent, it was hoped that these new 3D visualization tools would help the remaining workforce be more efficient at handling increasingly larger and more complex 3D volumes. Why then are these systems under-utilized?

Cost is one consideration. Hardware costs have come down dramatically and are no longer the issue. High-end UNIX machines used to be required, but many of these techniques can now be used on relatively inexpensive PC-based systems with a good graphics card and lots of RAM. Software costs are a likely factor, though. More industry work is being done by small and mid-size companies rather than majors, and management may still deem the software cost of having all their interpreters use these systems too high. This thinking does seem backward, considering that drilling costs, and even 3D acquisition costs, far outweigh interpretation and data analysis costs.

Another factor may be lack of experienced mentors to illustrate and train operational staff who do not have the luxury of time to experiment with new technologies.

Management presentation expectations can also be an issue. Senior management may be used to reviewing flat hardcopy maps and seismic sections rather than 3D depictions of the data. 3D volume-rendered displays such as Figures 4 and 5 are fantastic at showing stratigraphic and structural details but are visual displays best shown in 3D. When you try to convey these through representation as a 2D paper plot, much of the impact is lost. Management is used to having a tangible paper map or seismic section. Much of the decision making process must change to take advantage of the far greater 3D presentation capabilities of today’s systems to convey these complex structural and stratigraphic relationships in 3D during the decision process. Some software allows you to capture animation files of “fly through” visualization imagery from your session that can be simply played back to management during the decision making process without the time, effort, and skill required to actually run the software.

When beginning to experiment with 3D voxel rendering techniques, many interpreters may have unrealistic expectations, and wish to see everything at once within a 3D view. Hardware restrictions sometimes necessitate trimmed volumes, formation or fault block sculpted views, or stacked slabs of voxels. If the interpreter is unaware of how to best create and manipulate these sub-volumes, frustrations may occur. These processes may also lead to utilizing more disk space as multiple versions of the data may be required for specific purposes. To overcome these teething problems, the interpreter needs to invest the time to learn how to manipulate the 3D sub-volumes effectively.

In some exploration plays, where a major unconformity with truncated events below and onlapping events above must be picked, manual picking may still be required. In the presence of such cycle splits, automated picking techniques work poorly or not at all, and manual interpretation on section views may be necessary. Even so, this is still best done within a 3D visualization environment. Figure 7 shows an example of such a surface, with many cycle splits, that was picked manually where auto-picking failed.

Some interpreters either lack the time to experiment or do not trust newer techniques such as sub-volume detection of channels. This was the case with the client mentioned above, who spent months manually picking a subtle channel line by line, whereas isolating the channel with opacity control and picking the channel system through sub-volume detection took only minutes and gave a more consistent, more detailed result.

Many people are “mouse-challenged” within a 3D view. Sometimes multiple buttons must be pressed while dragging in a certain direction, and this may lead to frustration. I admit I sometimes have an extreme desire to take over “driving” when watching someone struggle with the interface.

Summary

Frankly, I am perplexed by the slow pace of adoption of many of the 3D visualization techniques, in particular the “volume-based” techniques that offer the freedom to explore within the data cube in a new and unique fashion. This, of course, creates opportunities to find anomalies that were missed, or not seen as significant, on conventional section-based displays. After all, as interpreters, we are paid well to use our talents and all the tools at our disposal to find exploration leads or better delineate known reservoirs to the best of our abilities with the current technologies at hand.

I would like to thank Schlumberger – Petrel Division, Paradigm Geophysical, and my clients for permission to include the images used in this article.

Barrie Jose

Sproule Associates Limited

Calgary
