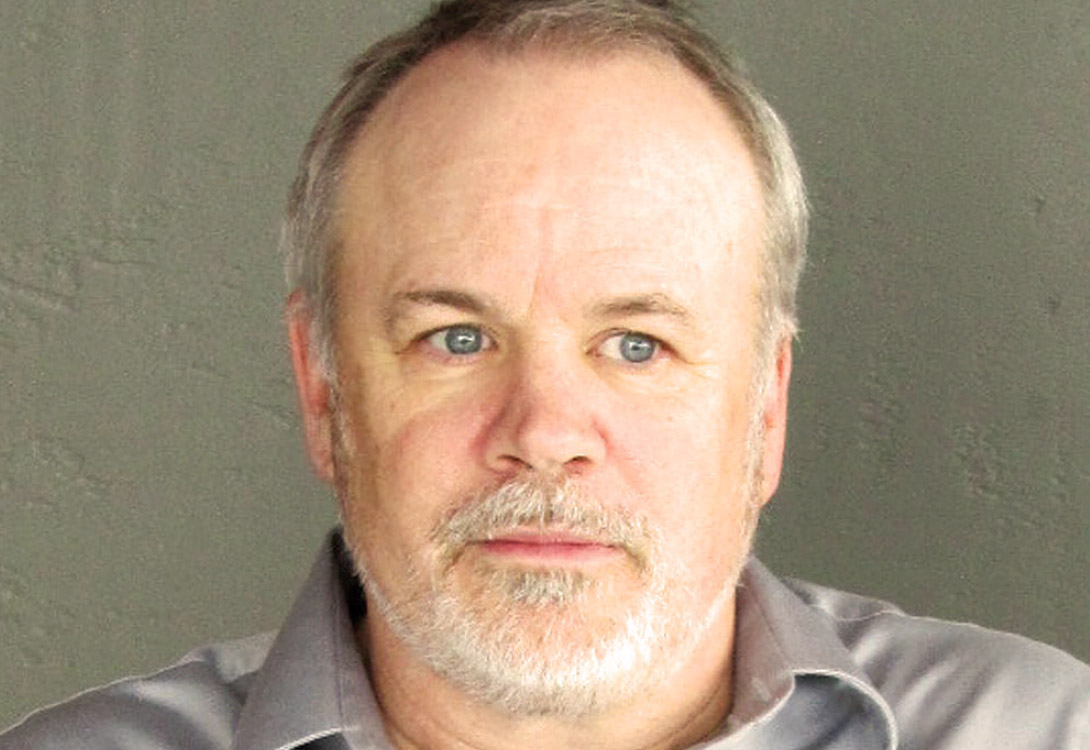

Stewart Trickett is a highly experienced geoscientist who works as Manager of Research and Development at CGGVeritas, Calgary. Though trained as a mathematician and also having studied computer science, Stewart has researched and developed geophysical processing software for the last 33 years. During this time he has worked at Veritas Seismic Ltd., Seismic Data Processors Ltd., and Kelman Technologies Inc., which was later taken over by Fugro and more recently by CGGVeritas. Stewart was the chief architect of Kelman’s ‘Kismet’ system and the ‘Sage’ processing system that Veritas used for 2D and 3D land data processing. Glimpses of his contributions in different areas of geophysics emerge as one runs down his impressive list of publications. These include surface-consistent statics, phase, wavelet instability, deconvolution, noise suppression, stretch-free stacking, and trace interpolation, to name the prominent ones.

Stewart is a versatile technical writer and presenter. His presentation at the 2012 SEG Convention, Las Vegas was adjudged to be in the top 30 presentations.

Stewart agreed rather reluctantly to our request for an interview, as he shies away from getting photographed. Following are excerpts from the interview.

Please tell us about your educational background and your work experience.

I studied computer science and mathematics at the University of British Columbia in the late 1970s. The double honours program was more work than I could handle, so I switched to pure computer science in my final year, and graduated in 1979.

I was looking for a job as a numerical analyst, which is an expert in mathematical computation. There didn’t seem to be much call for it in Vancouver, but Calgary was booming due to the energy crisis which had sent oil prices soaring, so I spent a week there dropping off my résumé. The major oil companies weren’t falling over themselves to offer me a job, but I managed an interview with a tiny company called Veritas. Actually the receptionist sent me into the interview by mistake, thinking I had booked it beforehand, so Mike Galbraith was confused when I walked into the room. Still, I got the job as a programmer for their Aurora seismic processing system.

That must have been in the early days of Veritas – what was it like?

It was a shock. Veritas took up a couple of floors of rather seedy looking offices overtop of a bar. Programming was done using card decks and RDS 500 computers with 64 kilobytes of memory that you had to book beforehand and bootstrap yourself. Coming from a university that used mainframe computers and a file system, it was like entering the dark ages. But soon Veritas was in a new building with modern computers.

Mostly I worked on graphics software, but Mike Galbraith had conceived of this program called SPL, which stood for Signal Processing Language, and I got to develop it. SPL was a language made up of basic signal processing operations that you could insert in your processing job deck. You could design all sorts of filters and procedures without programming or compiling. Even though it was too difficult for most seismic processors to write their own SPL decks, it turned out to be immensely successful, and processors were constantly coming to me to build SPL jobs to help solve their problems. It was a great way to learn signal processing in a hurry. It became a valuable tool for Veritas researchers like Dan Hampson, Brian Russell, Rob Stewart, and Graham Millington. When Veritas leased the Aurora system to the University of Saskatchewan, I heard it also became popular among the geophysics students there. Today, of course, they would use Matlab or something similar.

You got your B.Sc. degree in computer science, worked at Veritas for some time, and then decided to go back to school. How did you choose University of Waterloo for doing your Masters?

I wanted to resume studying applied mathematics which I had suspended in my final year at UBC, so in 1982 I left Veritas to attend the University of Waterloo in Ontario. Waterloo was a young university with an excellent reputation for mathematics, computer science, and engineering. They are sometimes called “MIT North”. My thesis went well and I enjoyed doing research, but I wasn’t keen on the course work, so after earning my Master’s degree I returned to Calgary.

So you worked as a programmer at Veritas once again, from 1984-1992? Tell us about those years.

Well actually it wasn’t exactly like that. When I returned to Calgary from Waterloo in 1984 I thought I would have no problem finding a job, but the oil industry was in a downturn. I eventually joined Seismic Data Processors, or SDP, a small processing firm that used SSC’s Phoenix system. I developed a first-break picking and weathering interpretation system called Winter, which I thought worked rather well. It incorporated robust statistics rather than the usual least-squares inversion. But in 1987 the industry went into another downturn, a severe one this time, and SDP was nearly wiped out. With my salary reduced and hours cut, I realised I wasn’t going to get rich there, so I rejoined Veritas Seismic Processing.

OK, so you rejoined Veritas in 1987 then – got it! Those must have been exciting times, the heyday of Veritas was it not?

Actually, most of the Veritas programmers and researchers had moved over to Veritas Software, a software-leasing company, leaving the seismic processors poorly supported. I was hired to help fill the gap. I give Dave Robson, the main owner of Veritas at the time, credit. He invested in software development at a time when most other centres could only complain about how tough things were.

Around 1989, Veritas Seismic realized that the Aurora seismic processing system was not going to meet their future needs, so they decided to build a new system. After some pleading on my part, I was made the chief architect. We couldn’t decide what to name it, so Wilf Reynish, the president of Veritas Seismic, declared it was to be called the Sage system, for no particular reason that I can tell. Perhaps it was his favorite herb.

It took us about two years to build a complete processing system, an astonishing feat by today’s standards. That’s more of a testimony to how far seismic processing has advanced in the last 20 years than a reflection on our programming. Sage was intended to handle both 2D and 3D land seismic equally well, perhaps the first system designed from the start to do so. The job decks were free format and the interface for each processing module was guided by a team of processors. It was written in C, a more modern programming language than Fortran 77, which allowed us to escape from the memory constraints that were crippling seismic processing systems with arbitrary limits. And I think the results showed. Veritas dominated the Calgary 3D processing market for many years. The Sage system is still in use today, despite management’s efforts to retire older processing systems.

After all these successes, how or why did your stint at Veritas end?

Reorganization resulted in a software manager who I did not get along with, so despite my success at designing and developing Sage, I was laid off in 1992 during yet another industry downturn. It didn’t last long. I joined Kelman Seismic Processing at the start of 1993 as a programmer for their Seisrun processing system.

You stayed at Kelman for 20 years or so – well really you haven’t left, the company has just gone through ownership changes. Tell us about this phase of your career, some of the highlights.

One memorable project or initiative I recall involved surface consistent deconvolution. Myself, my boss Brian Link, and Bill Goodway, then at PanCanadian, had an idea that we could improve wavelet stability across the seismic section by quality controlling the source and receiver amplitude spectra derived from surface-consistent deconvolution. Noise should have a distinct shape on these spectra. We found that noise was pervasive. Often the only clean part of the spectrum was between 15 and 55 Hz, even on good data, leaving me skeptical about the results of surface-consistent deconvolution. This drove us to develop better methods to remove noise on prestack data, and Kelman eventually ended up with an impressive suite of tools to do so.

Another highlight was more of a computer science or programming achievement. In the late 1990s, Jim Jiao and I got tired of developing software in Fortran, and wrote a wrapper around the ancient software that allowed us to write seismic processing modules in the C++ language. C++ is object-oriented, and makes possible more modern programming methods. The goal was to make software development as simple and free of constraints as possible, and to maximize reuse.

In 2002 I was promoted to manager of research and development at Kelman. At that time we had a serious problem, as the Seisrun system ran only on expensive Sun computers and could only use 32-bit addressing. To exploit the cheap Intel and AMD machines then available, I wrote a program called Kismet which had three processing modules that read seismic traces from files, performed 3D Kirchhoff migration, and wrote seismic traces to files. It used the C++ processing modules we had written for the Seisrun system and it ran on any platform. Kismet immediately started making us money, since we could now exploit the cheaper hardware. We then hired Alan Dewar to extend Kismet so that it could run all of the C++ processing modules from the older system. Over the last ten years we’ve been completing and expanding the Kismet system, including extensive interactive graphics.

Recently your name has been associated with applications based on Cadzow filtering techniques. How did this come about?

Around 2000 I started looking at rank-reduction methods for removing random noise. Rank-reduction is also known as the Karhunen-Loeve transform, truncated SVD, sub-space filtering, dimensionality reduction, and many other names. Before then, rank-reduction filtering on seismic data had been done almost entirely in the time domain, and as a result one had to take heroic steps to apply it to structured seismic data. I figured rank-reduction filtering applied to complex constant-frequency slices could solve that problem. I developed a method called f-xy eigenimage filtering and proved mathematically that it could handle structured data as easily as it could handle flat data.

Although f-xy eigenimage filtering had lots of great theoretical properties, it was a weak noise attenuator and worked only in two spatial dimensions. I found a 1988 paper by James Cadzow, a former professor of electrical engineering at Vanderbilt University, that showed how to rank-reduction filter in one dimension by arranging the values into a Hankel matrix. Cadzow was not the only one to discover this – it had been developed by others at the same time. I applied this method, which had previously been used in medical imaging and astronomy, to frequency slices in one spatial dimension, and it seemed better at preserving signal and removing random noise than the popular f-x deconvolution.
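As a rough illustration of the one-dimensional filter described above, the sketch below takes the complex values of one frequency slice, arranges them into a Hankel matrix, keeps only the largest singular values, and averages along the anti-diagonals to return to a one-dimensional series. It is a minimal NumPy sketch rather than the code used at Kelman: the function name `cadzow_1d`, the roughly square matrix shape, and the choice of `rank` are all assumptions made for illustration.

```python
import numpy as np

def cadzow_1d(slice_values, rank):
    """Rank-reduce one complex frequency slice via its Hankel matrix.

    Hypothetical helper for illustration; `rank` is the assumed number
    of linear events present in the slice.
    """
    x = np.asarray(slice_values, dtype=complex)
    n = x.size
    rows = n // 2 + 1            # aim for a roughly square Hankel matrix
    cols = n - rows + 1
    # Hankel structure: entry (i, j) holds x[i + j]
    idx = np.arange(rows)[:, None] + np.arange(cols)[None, :]
    hankel = x[idx]
    # Truncated SVD: keep only the `rank` largest singular values
    u, s, vh = np.linalg.svd(hankel, full_matrices=False)
    reduced = (u[:, :rank] * s[:rank]) @ vh[:rank, :]
    # Average each anti-diagonal to recover a 1D series
    sums = np.zeros(n, dtype=complex)
    counts = np.zeros(n)
    np.add.at(sums, idx, reduced)
    np.add.at(counts, idx, 1.0)
    return sums / counts

# A single dipping event is a complex exponential across traces,
# which gives a rank-1 Hankel matrix.
clean = np.exp(1j * 0.3 * np.arange(32))
rng = np.random.default_rng(0)
noisy = clean + 0.3 * (rng.standard_normal(32) + 1j * rng.standard_normal(32))
filtered = cadzow_1d(noisy, rank=1)
```

In practice the filter would be run on every frequency slice inside a sliding spatial window, with the rank set to the number of dips expected in that window.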

In 2007 I figured out how to extend Cadzow filtering to more than one spatial dimension. In retrospect it was kind of obvious, and I don’t know why it took me so long. But it was like striking a rich vein of ore, opening up all sorts of possibilities which myself, Lynn Burroughs, Andrew Milton, and others at Kelman have since developed. Mauricio Sacchi, head of the SAIG consortium at the University of Alberta, also realized these possibilities, and his students have written many papers based on it. In the last four years, Kelman and SAIG have been leap-frogging each other’s work. Rank reduction on frequency slices has been extended to prestack noise removal in up to four spatial dimensions, 5D and de-aliasing interpolation, tensor completion, erratic and coherent noise removal, and simultaneous-source deblending.

Where are things at now with Kelman in its latest incarnation?

In 2011, the geophysical division of Kelman Technologies was sold to Fugro, who were looking to improve their land processing. And in early 2013 we were sold to CGGVeritas, now renamed to simply CGG. It’s still uncertain how we’re going to fit in, as CGG already has half a dozen processing systems. This February I visited the head office outside of Paris. It was a big modern glitzy office building with the words “CGG Veritas” in bright lights on it. It occurred to me that we had come a long way from the seedy looking office overtop of a bar.

What is it that you love about our industry?

If you listen to certain political pundits, free enterprise is driven by naked greed. Unbridled exploitation. Dog-eat-dog savagery.

And it’s pure tripe. Our industry is a great example of enlightened self interest. The level of professionalism and co-operation between commercial competitors and between business and academia is amazing. People do well in this business by working with others and endlessly seeking to do things better, and rarely through some Hollywood fantasy of ruthless exploitation. And I really love that aspect.

And that will be the last of the politics.

Do you have a memorable incident from your professional successes that you would like to share with us?

In the last few years I’ve given talks in smaller geophysical centres like Pittsburgh, Tulsa, Midland, and Denver. Many of these societies have their meetings in bars, often with free drinks. I think the CSEG can learn from this. In Tulsa I was heckled, which was a first for me. I thought it was funny but it mortified the organizer. The heckler eventually dozed off, perhaps a result of the free drinks. One of the questioners was an older gentleman who seemed to know what he was talking about. He came up afterwards and introduced himself as Turhan Taner, who is one of the pioneers of computerized seismic processing.

So, you have basically developed seismic processing software, correct? Tell us about the kinds of problems you have worked on.

I’ve worked on almost every kind of land seismic processing software there is. Leaving aside any specific technical problem, the most relentless foe is complication. Needless complication wastes time, creates errors, and hinders our understanding. I’ve never bought into the idea that power and simplicity are incompatible. Any time a solution seems overcomplicated, back it up and have another run at ‘er.

What personal and professional vision are you now working towards?

Professionally my goal is to provide the best land seismic processing system possible. It’s not easy, as such a system must be simple and intuitive but at the same time have immense flexibility, capable of standing up and dancing the Macarena when needed.

According to a recent survey of 2000 people in the U.K., people aged 68 or 69 years are the happiest, as their priority in life is ‘to have fun’. What is your take on this?

I’m not sure how I’m going to take to retirement. What do I do with myself without some kind of problem to work on? There is the possibility of semi-retirement, but I suspect that many people will be trying to do the same, and younger generations likely won’t appreciate a lot of geezers crowding the hallways.

Looking at your list of publications, I notice you have worked in quite a few areas of geophysics. Beginning with surface consistent statics problems, wavelet instability, various ways in which noise can be suppressed, interpolation etc. You seem to be all over the place. Your comments?

My career has been building seismic processing systems, so I’ve worked on every aspect of traditional seismic processing except for imaging. That’s a little strange given that I wrote my Master’s thesis on the numerical solution of partial differential equations, which is what migration is. The biggest theme to my research has been removal of noise, which I define as any energy on the seismic records that we don’t know how, or can’t be bothered, to use.

What according to you is your most important contribution to geophysics?

First, the design and construction of two seismic processing systems that, I think, were popular with both processors and programmers. Second, the development of rank-reduction filters on constant-frequency slices (“Cadzow filtering”) for noise suppression and interpolation. Ten years ago, the industry lacked powerful prestack random noise suppressors, particularly for structured data. I think this has helped to fill that gap.

Are there other areas of geophysics that fascinate you in particular?

Robust statistics in processing. We tend to rely on least-squares estimation for most inversion problems. But seismic data, particularly for land, often contains erratic noise which least-squares methods do a poor job on. Even when robust statistics are used, our approaches are sometimes outdated, such as when we use least-L1-norm estimators. We also tend to perform robust inversion using iteratively reweighted least-squares, whereas a lesser known strategy called iterative pseudo observations should have broader application.
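For readers unfamiliar with iteratively reweighted least-squares, the following is a minimal sketch of the idea on a toy straight-line fit with one erratic sample. It uses Huber weights, which is only one of several possible robust weightings, and the function name `irls_line_fit`, the threshold `delta`, and the fixed iteration count are assumptions chosen for illustration, not a description of any production code.

```python
import numpy as np

def irls_line_fit(t, d, n_iter=20, delta=1.0):
    """Fit d ~ a + b*t robustly with Huber weights (illustrative helper)."""
    t = np.asarray(t, dtype=float)
    d = np.asarray(d, dtype=float)
    G = np.column_stack([np.ones_like(t), t])   # design matrix [1, t]
    w = np.ones_like(d)                         # start as ordinary least squares
    for _ in range(n_iter):
        sw = np.sqrt(w)
        # Weighted least squares: scale rows of G and d by sqrt(weight)
        m, *_ = np.linalg.lstsq(G * sw[:, None], d * sw, rcond=None)
        r = np.abs(d - G @ m)
        # Huber weights: 1 for small residuals, delta/|r| for large ones
        w = np.where(r <= delta, 1.0, delta / np.maximum(r, 1e-12))
    return m

t = np.arange(10.0)
d = 2.0 + 0.5 * t
d[3] += 50.0                                    # one erratic sample
G = np.column_stack([np.ones_like(t), t])
m_ls, *_ = np.linalg.lstsq(G, d, rcond=None)    # plain least squares
m_robust = irls_line_fit(t, d)                  # reweighted fit
```

On this toy data the plain least-squares intercept is dragged far from the true value of 2 by the single bad sample, while the reweighted fit stays close to the underlying line.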

Another area is software. The industry depends heavily on software development, and yet we rarely bother to share advice on what works and what doesn’t.

What are the directions in which future R & D worldwide will be focused in our industry? Any important developments that we will see in the next five years?

Acquisition is changing rapidly. Simultaneous shooting, single-point receivers, time lapse, targeted and randomized shooting patterns, urban acquisition, multi-component and spatial-gradient recording, massive increases in recorded channels, broadband acquisition, and so on, will not only change things in the field but in the processing centres.

What do you think are 5 top technical articles that in your opinion had an impact on your thinking and which are widely hit on the website, whether it is the SEG, EAGE or CSEG? You might want to keep these to the processing of seismic data.

The most influential articles for me have been outside of geophysics. Signal processing is used in many fields, and most problems we face have been tackled in another guise elsewhere. Within geophysics, I like old-time articles that inspired entire fields of research.

“Robust Modeling with Erratic Data”, 1973, Claerbout and Muir. They foresaw a future where almost every geophysical statistical estimation was robust. It didn’t come true, but modern robust statistical methods might still make it possible.

“Estimation and correction of near-surface time anomalies”, 1974, Taner, Koehler, and Alhilali. This was the first geophysical paper I recall reading, and it still reads very well today. It describes surface-consistent statics, a technique now extended to deconvolution and scaling, and is a foundation for land seismic processing.

“Outer Product Expansions and Their Uses in Digital Image Processing”, 1975, Andrews and Patterson.

“Eigenimage processing of seismic sections”, 1988, Ulrych, Freire, and Siston.

These two papers demonstrate that rank reduction is a powerful and fundamental tool for noise removal.

“Earth Soundings Analysis: Processing versus Inversion”, 1992, Claerbout. This book takes a single theme – processing versus inversion – and applies it to dozens of seismic processing problems.

Have you thought of volunteering your time with the professional societies like the CSEG and the SEG?

Is that a hint? I primarily help out the SEG by peer reviewing papers and occasionally chairing conference sessions. I don’t think I’d be happy sitting in committee meetings. I can probably help more on the technical side.

What do you do first when you get your copy of the RECORDER?

The CSEG has a trade magazine? Actually I find the quality of the RECORDER to be excellent, although this interview might bring down the average. I usually go to the back pages first to see who’s moved where.

What other interests do you have? I notice you are active in yoga, skiing, canoeing, etc.

Yoga? I think you’ve confused me with someone else. I have the flexibility of a potato chip. I golf (increasingly poorly), garden, and box. Well anyway I used to box on a recreational level. I still do a boxer’s workout but I haven’t sparred for years, and if you’re not getting punched in the face, it’s not really boxing. I downhill skied a lot when I was young, as I grew up in a ski town in B.C. I now prefer cross-country.

What would be your message for young geophysicists entering our profession?

I don’t consider myself a geophysicist. I’m more of a software developer and mathematician who has carved out a niche in the geophysics community.

Still, learn to communicate. Students spend years studying mathematics and science, but almost nothing on how to get ideas across. I guess we figure communication skills come naturally, or perhaps that it’s unteachable, but this is plainly untrue. We should be studying and practicing communication as intensely as we do any other useful skill. Read books and take courses on it. Learn to write and to build presentations. Learn to speak clearly and simply, without ums and uhs, and to project your voice to the back of the room. Constantly get people’s feedback.

And second, if you profess some challenging goal for your career, more experienced hands might wisely lecture you on how tough it is and how unlikely it is you’ll achieve it. Thank them for their advice and ignore them.
